[Translation] Bypassing the LinkedIn Search Limit by Playing With the API

[Because my extension got a lot of attention from the foreign audience, I translated my original article into English].

Limit

LinkedIn is a top-rated professional network, but free accounts are unfortunately subject to a restriction called the Commercial Use Limit (CUL). Most likely you, like me until recently, have never run into it or even heard of it.

The point of the CUL is that when you search for people outside your connections or network too often, your search results are limited to only 3 profiles instead of 1000 (100 pages of 10 profiles each by default). Nobody knows how "often" is measured; there are no precise metrics, and the algorithm decides based on your actions: how frequently you've been searching and how many connections you've been adding. The free CUL resets at midnight PST on the 1st of each calendar month, and you get your 1000 search results back, for who knows how long. Of course, Premium accounts have no such limit.

However, not long ago I started messing around with LinkedIn search for a pet project and suddenly ran into the CUL. Obviously, I didn't like it much; after all, I hadn't been using the search for any commercial purposes. So my first thought was to explore this limit and try to bypass it.

[Important clarification — all source materials in this article are presented solely for informational and educational purposes. The author doesn’t encourage their use for commercial purposes.]

Studying the Problem

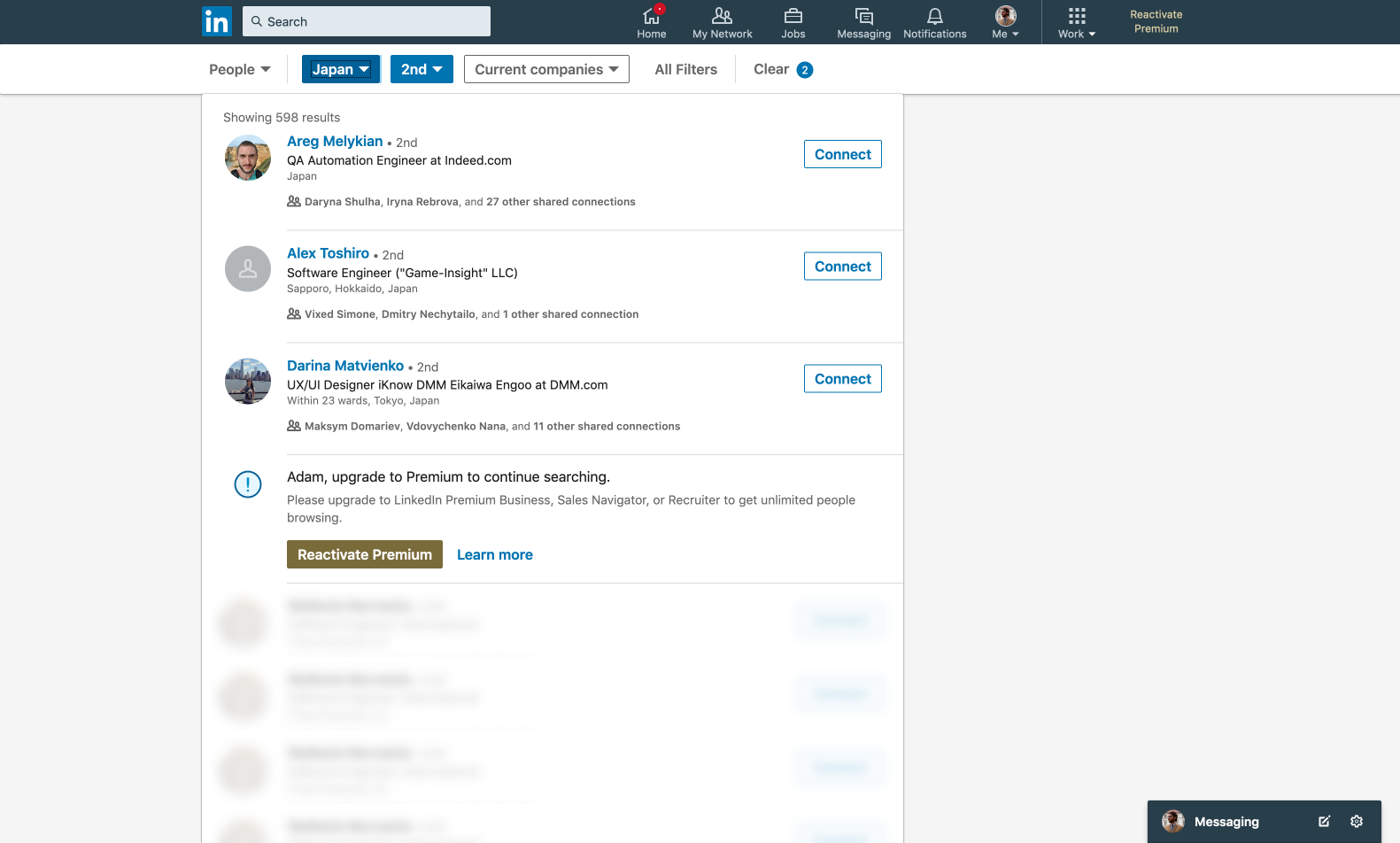

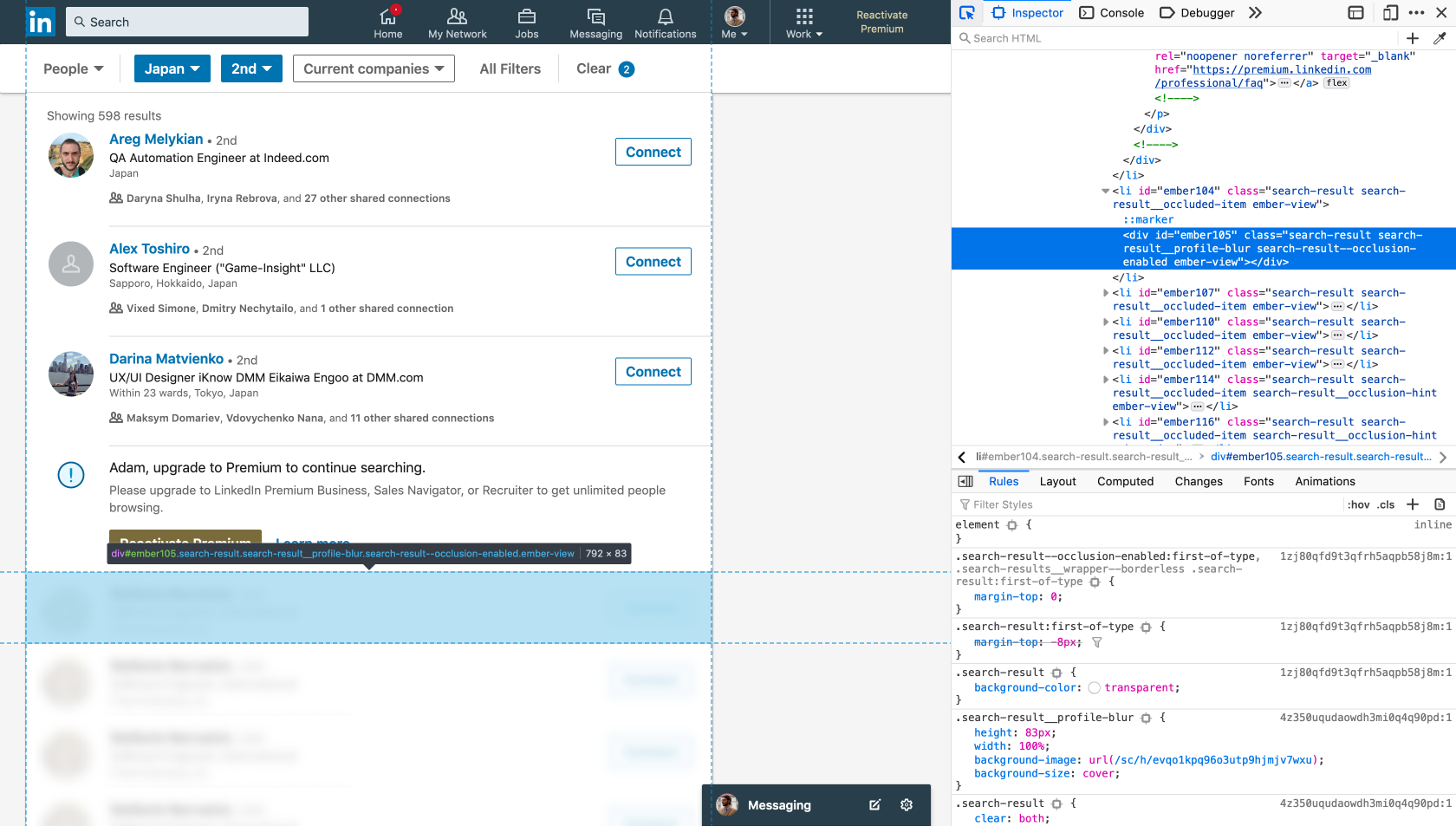

What we've got: instead of 10 profiles with pagination, the search shows only 3, followed by a "recommendation" to buy a Premium account, and then more profiles that are blurred and unclickable.

The first thought you have as a developer is to open the browser's Developer Tools and examine those hidden, blurred profiles: maybe you could remove the styles responsible for the blurring, or extract the data from the markup. But, as could be expected, these profiles are just placeholder images and contain no data.

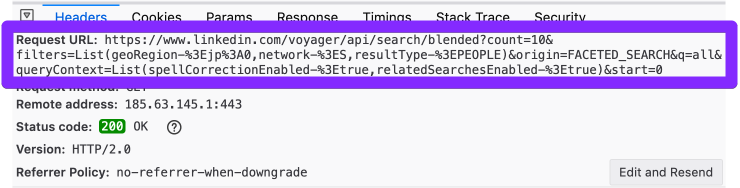

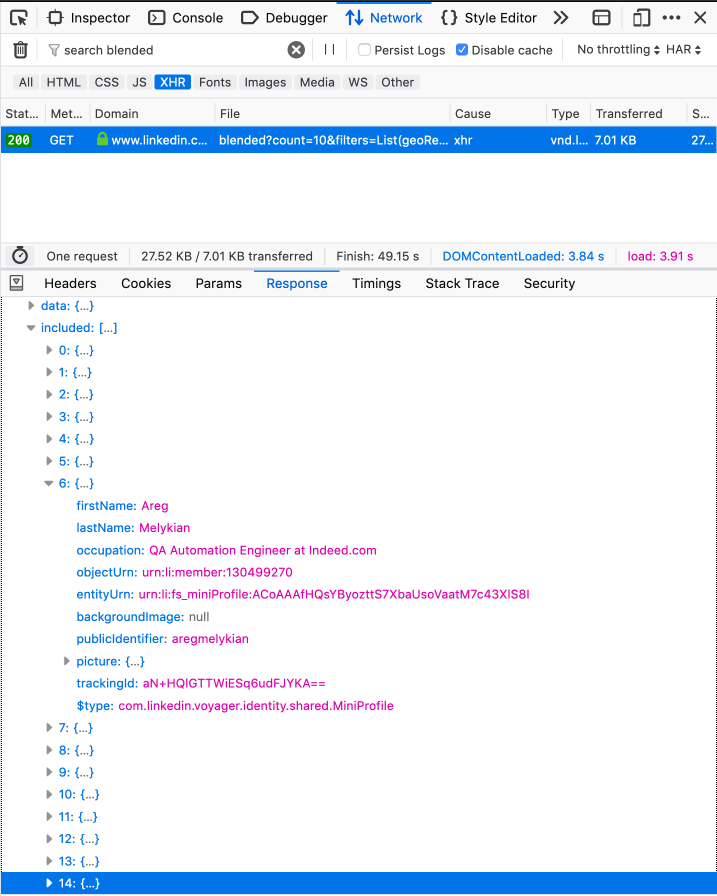

Okay, now let's go to the Network tab and check whether the search request really returns only 3 profiles. We find the request we're interested in, `/api/search/blended`, and look at the response.

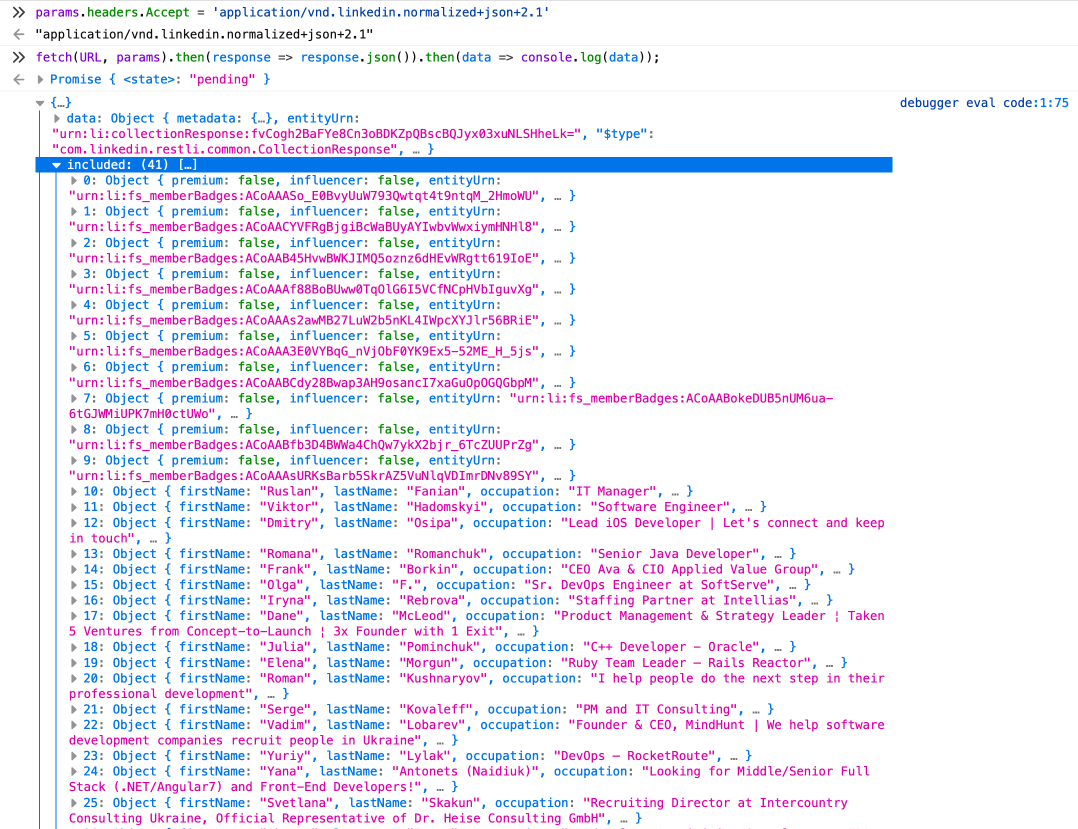

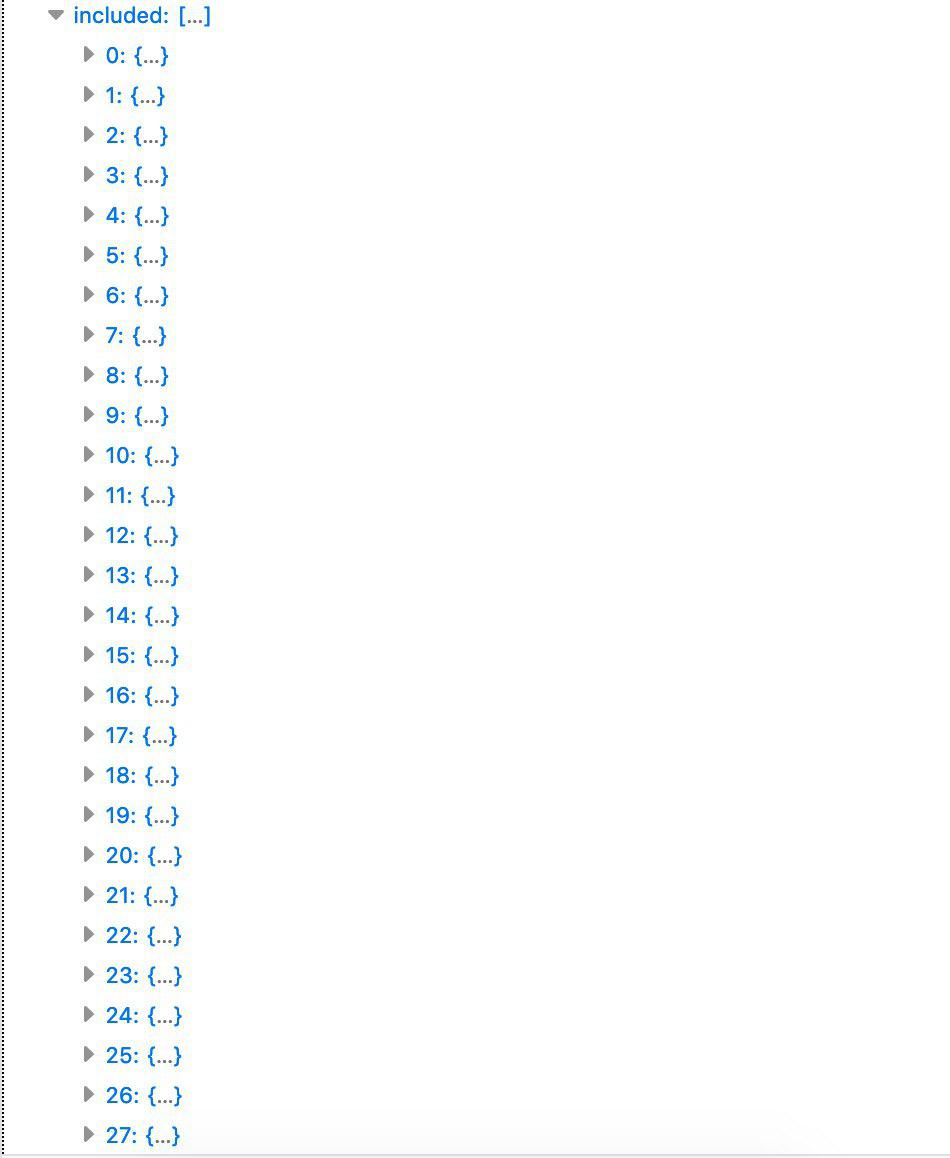

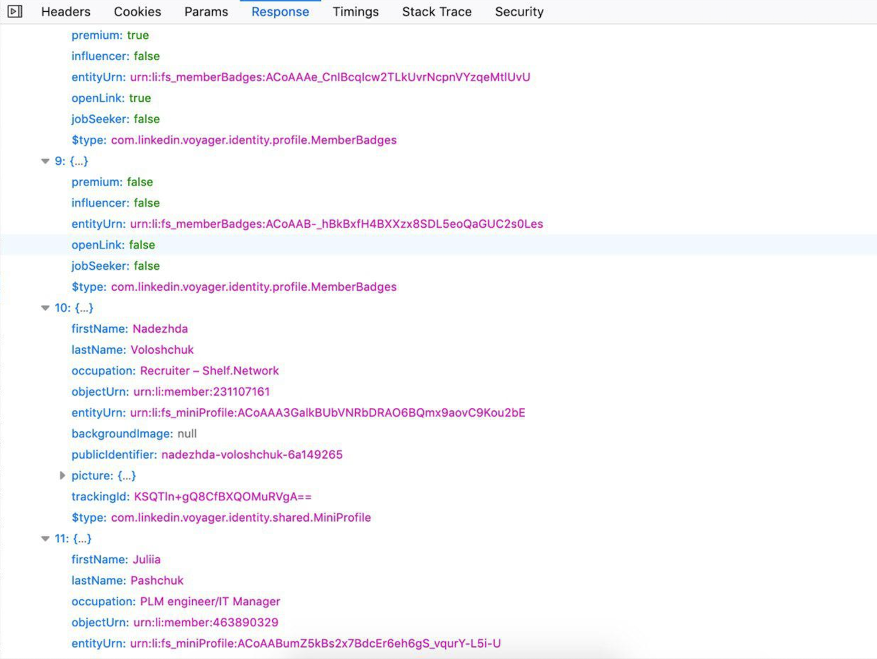

Profiles are contained in the `included` array, but it already holds 15 entities. The first 3 of them are objects with additional data: each one contains information about a particular profile (for example, whether the profile is a Premium one).

The next 12 are real profiles: these are the actual search results, of which only 3 will be shown to us. As you can already guess, LinkedIn displays only those profiles that received the additional information (the first 3 objects). For comparison, the search response for an account without the limit returns 28 entities: 10 objects with additional info and 18 profiles.
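To see this split for yourself, it helps to count the entries of `included` by kind. The snippet below is only a rough sketch: it assumes that each entity carries a `$type` string field, and the type names in the sample data are invented for illustration, not LinkedIn's real ones.

```javascript
// Count the entities of an `included` array by their type.
// Assumption: each entity has a `$type` string field; entities
// without one are counted under 'unknown'.
function countByType(included) {
  const counts = new Map();
  for (const entity of included) {
    const type = typeof entity.$type === 'string' ? entity.$type : 'unknown';
    counts.set(type, (counts.get(type) || 0) + 1);
  }
  return counts;
}

// Hypothetical sample mirroring the limited response described above:
// 3 objects with additional info followed by 12 profiles.
const sampleIncluded = [
  ...Array.from({ length: 3 }, () => ({ $type: 'example.AdditionalInfo' })),
  ...Array.from({ length: 12 }, () => ({ $type: 'example.Profile' })),
];

console.log(countByType(sampleIncluded));
```

In the browser you would pass the `included` array of a real response (copied from the Network tab) instead of the sample.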

I'm not sure yet why more than 10 profiles are returned when 10 are requested, especially since the extra ones aren't displayed to the user; you won't see them even on the next page. If you analyze the request URL, you'll see `count=10` (the number of profiles to return in the response; 49 is the maximum).

Any comments on this subject would be much appreciated.

Experimenting

Okay, now we know the most important thing for sure: the response comes with more profiles than LinkedIn shows us. So we can obtain more data despite the CUL. Let's try to call the API ourselves right from the console, using `fetch`.

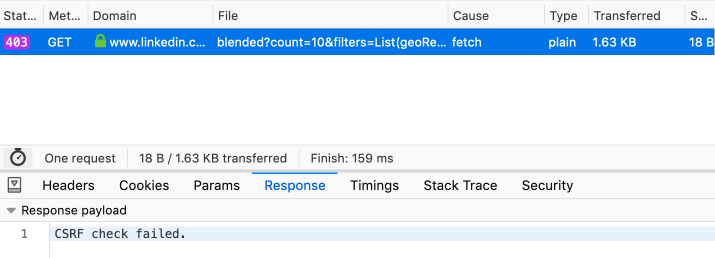

As expected, we get a 403 error. This is a security measure: we didn't send a CSRF token (see CSRF on Wikipedia). In a nutshell, it's a unique token added to each request and checked on the server side for authenticity.

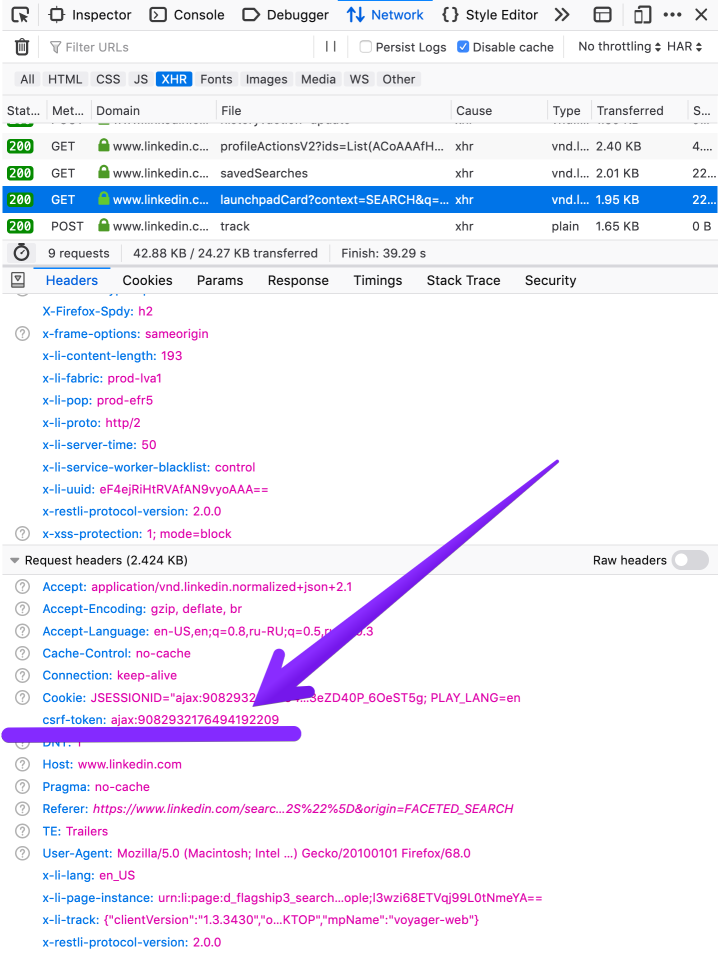

You can copy it from any other successful request or from the cookies, where it's stored in the `JSESSIONID` field.

From the cookies, right through the console:

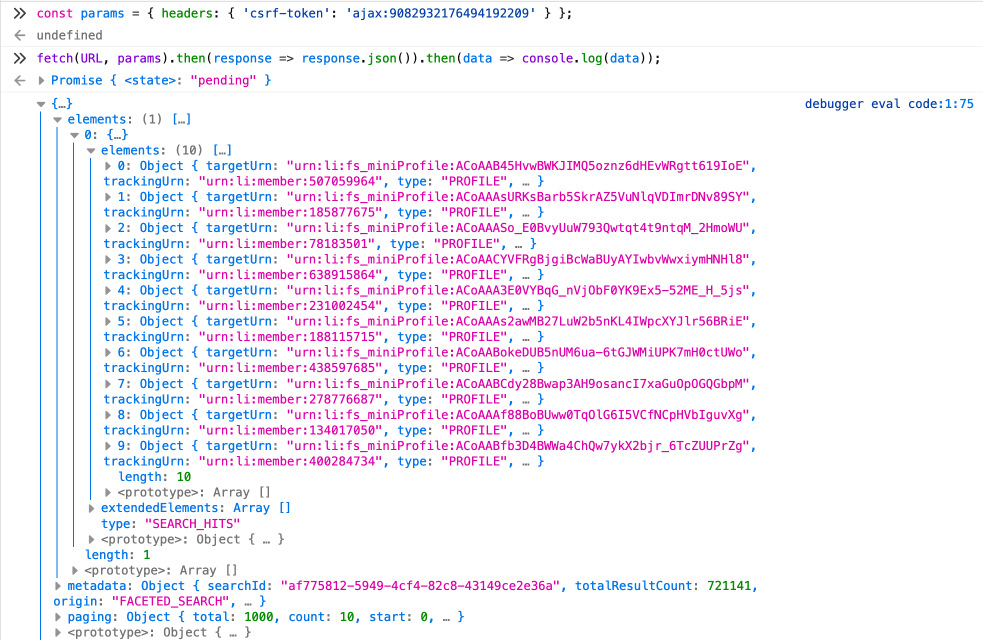
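In text form, pulling the token out of the cookies might look roughly like the sketch below. The helper name `getCsrfToken` is made up for this example, and it assumes the `JSESSIONID` value may be wrapped in double quotes that need to be stripped; the cookie string in the demo is invented.

```javascript
// Extract the CSRF token from a cookie string such as `document.cookie`.
// Assumption: the token is the value of the JSESSIONID cookie and may be
// wrapped in double quotes, which are stripped here.
function getCsrfToken(cookieString) {
  for (const pair of cookieString.split(';')) {
    const separatorIndex = pair.indexOf('=');
    if (separatorIndex === -1) continue; // skip malformed entries
    const name = pair.slice(0, separatorIndex).trim();
    if (name === 'JSESSIONID') {
      return pair.slice(separatorIndex + 1).trim().replace(/^"|"$/g, '');
    }
  }
  return null; // cookie not found
}

// Made-up cookie string, for illustration only.
const exampleCookies = 'lang=v=2&lang=en-us; JSESSIONID="ajax:1234567890"; theme=light';
console.log(getCsrfToken(exampleCookies)); // → ajax:1234567890
```

In the browser console on a LinkedIn page, the call would be `getCsrfToken(document.cookie)`.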

Let's try again; this time, we pass a settings object to `fetch`. In it, we specify our CSRF token as a header.

Success! All 10 profiles are returned.

Because of the difference in the headers, the response structure differs slightly from what comes in the original request. You can get exactly the same structure by adding `'Accept': 'application/vnd.linkedin.normalized+json+2.1'` to the headers object, next to the CSRF token.
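Since the console screenshots don't survive as text, here is a minimal sketch of what the settings object for `fetch` could look like. The `csrf-token` header name and the `Accept` value come from this article; the helper `buildRequestSettings` and its `normalized` option are made up for the example.

```javascript
// Build the second argument for fetch(): request settings carrying the
// CSRF token and, optionally, the Accept header for the normalized structure.
function buildRequestSettings(csrfToken, { normalized = false } = {}) {
  const headers = { 'csrf-token': csrfToken };
  if (normalized) {
    headers['Accept'] = 'application/vnd.linkedin.normalized+json+2.1';
  }
  return { headers };
}

console.log(buildRequestSettings('ajax:1234567890', { normalized: true }));

// Usage in the browser console on a LinkedIn page, where `searchUrl` is the
// request URL copied from the Network tab and `token` is your own CSRF token:
// fetch(searchUrl, buildRequestSettings(token, { normalized: true }))
//   .then((response) => response.json())
//   .then((data) => console.log(data));
```

Keeping the headers in one small function makes it easy to switch between the two response structures while experimenting.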

More about the Accept Header

What’s Next?

Now you can edit (manually or automatically) the `start` parameter, which specifies the index in the full search result from which the next 10 profiles are returned (0 by default). In other words, by incrementing it by 10 after each request, we get our usual pagination with 10 profiles per page.

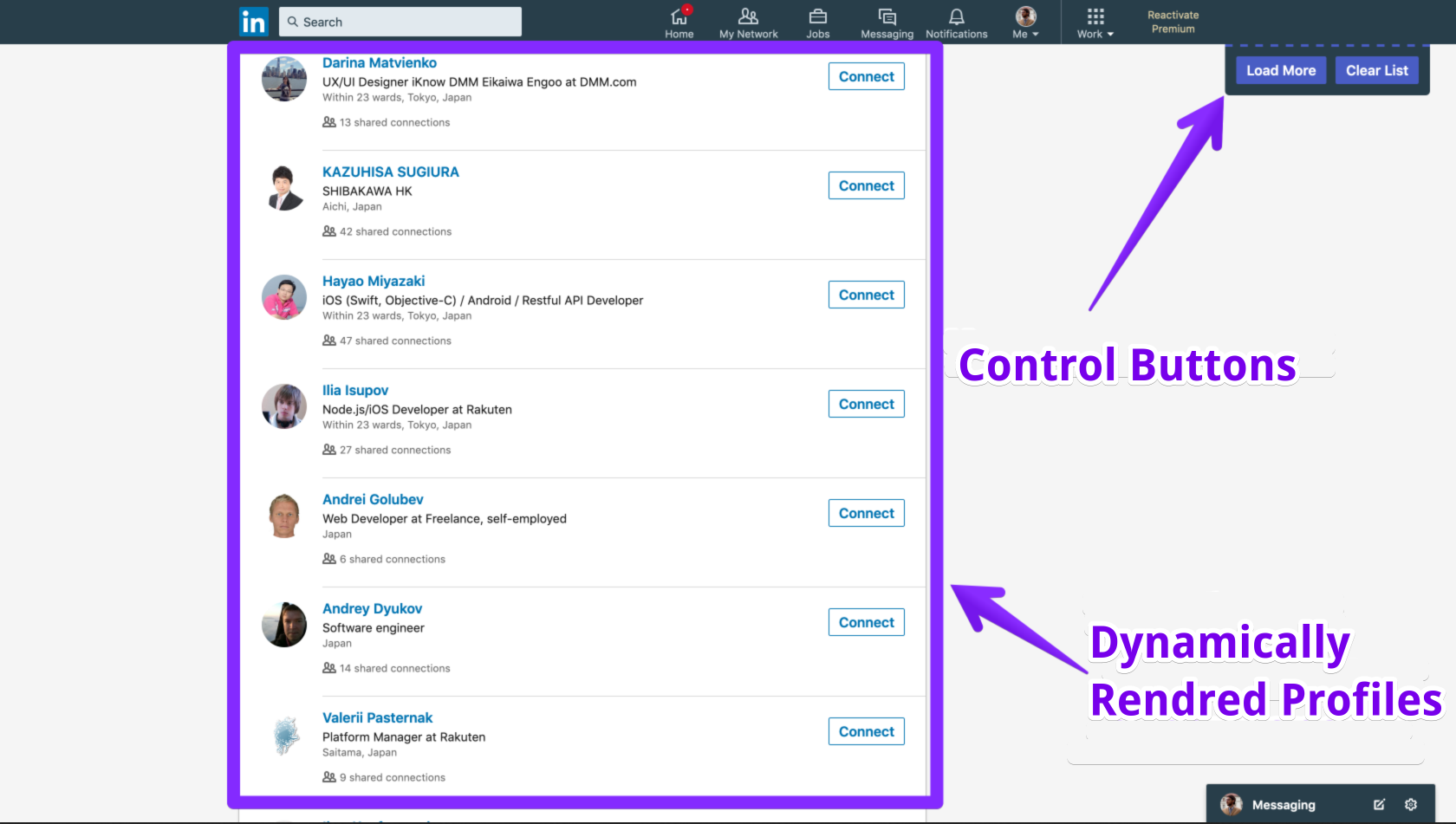
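The pagination arithmetic can be sketched as follows. Both helper names are invented for this example, and the URL is a shortened, hypothetical version of the real request. The `start` value is swapped in with plain string replacement on purpose, so the rest of the already-encoded query string stays untouched.

```javascript
// Return a copy of `url` whose `start` query parameter is set to `start`.
// Plain string replacement leaves the rest of the query string as it is.
function withStart(url, start) {
  const pattern = /([?&])start=\d*/;
  if (pattern.test(url)) {
    return url.replace(pattern, `$1start=${start}`);
  }
  // no `start` parameter yet: append one
  return `${url}${url.includes('?') ? '&' : '?'}start=${start}`;
}

// List the `start` values needed to page through `total` results.
function pageStarts(total, pageSize = 10) {
  const starts = [];
  for (let start = 0; start < total; start += pageSize) {
    starts.push(start);
  }
  return starts;
}

// Hypothetical shortened URL, for illustration only.
const baseUrl = 'https://www.linkedin.com/voyager/api/search/blended?count=10&q=all&start=0';
console.log(pageStarts(30).map((start) => withStart(baseUrl, start)));
```

Each URL in the resulting list corresponds to one "page" of 10 profiles.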

At this stage, I had enough data and freedom to continue working on the pet project. But it would be a sin not to try displaying this data right away, since it was already in my hands. We won't dive into Ember, which is used for the frontend. Since jQuery is included on the site, you can dig the basic syntax out of your memory and put together the following in a couple of minutes.

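```javascript
// Any image URL works as the placeholder; this 1x1 transparent GIF is a stand-in.
const placeholderImage = 'data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7';

// Render a profile block from the profile data and append it to the profiles list.
// NOTE: the original HTML template did not survive in this article; the markup below
// is a minimal reconstruction, and the `.text` sub-fields are an assumption.
const createProfileBlock = ({ headline, publicIdentifier, subline, title }) => {
  $('.search-results__list').append(
    `<li class="search-result">
      <a href="/in/${publicIdentifier}/">
        <img src="${placeholderImage}" alt="">
        <h3>${title.text}</h3>
      </a>
      <p>${headline.text}</p>
      <p>${subline.text}</p>
    </li>`
  );
};

// Fetch the data and render the profiles.
const fetchProfiles = () => {
  // CSRF token; replace it with your own
  const csrf = 'ajax:9082932176494192209';

  // object with the request settings; passes the token in a header
  const settings = { headers: { 'csrf-token': csrf } };

  // request URL, with a dynamic start index at the end
  const url = `https://www.linkedin.com/voyager/api/search/blended?count=10&filters=List(geoRegion-%3Ejp%3A0,network-%3ES,resultType-%3EPEOPLE)&origin=FACETED_SEARCH&q=all&queryContext=List(spellCorrectionEnabled-%3Etrue,relatedSearchesEnabled-%3Etrue)&start=${nextItemIndex}`;

  // make the request, render a block for each profile in the response,
  // and then increment the starting index by 10
  fetch(url, settings)
    .then(response => response.json())
    .then(data => {
      data.elements[0].elements.forEach(createProfileBlock);
      nextItemIndex += 10;
    });
};

// delete all profiles from the list
$('.search-results__list').find('li').remove();

// insert the "load more profiles" button
// (button markup reconstructed; only the `load-more` id is implied by the next statement)
$('.search-results__list').after('<button id="load-more">Load more profiles</button>');

// add the functionality to the button
$('#load-more').addClass('artdeco-button').on('click', fetchProfiles);

// set the default profile index for the request
window.nextItemIndex = 0;
```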

If you run this code directly in the console on the search page, it adds a button that loads 10 more profiles on each click and renders them as a list. Of course, you'll have to replace the token and URL with your own. The profile block will contain a name, job position, location, a link to the profile, and a placeholder image.

Conclusion

So, with minimal effort, we were able to find a weak spot and regain our search without any limitations. It was enough to analyze the data and its path and to look into the request itself.

I can't say that this is a serious problem for LinkedIn, because it poses no threat. The worst that could happen is some lost revenue, since this kind of bypass lets you avoid buying a Premium account. Perhaps such a server response is necessary for other parts of the website to work correctly, or it is simply the result of developer laziness or a lack of resources that doesn't allow doing better. (The CUL appeared in January 2015; before that, there was no limit at all.)

P. S.

I'm still working on this extension, and I plan to make it publicly available.

Message me if you're interested.

By popular demand to release this as an open-source product, I created a browser extension and published it for public use (free and even without miners). Besides bypassing the limit, it has some other features. You can take a look at it and download it here: adam4leos.github.io

Since this is an alpha version, don't hesitate to notify me about bugs, your ideas, or even about a crazy cool UI. I keep improving the extension and will post new versions from time to time.