Testing SQL Server code with tSQLt

FYI: this article is an expanded version of my talk at SQA Days #25.

Based on my experience with colleagues, I can state: DB code testing is not a widely spread practice. This can be potentially dangerous. DB logic is written by human beings just like all other «usual» code. So, there can be failures which can cause negative consequences for a product, business or users. Whether these are stored procedures helping backend or it is ETL modifying data in a warehouse — there is always a risk and testing helps to decrease it. I want to tell you what tSQLt is and how it helps us to test DB code.

There is a big warehouse using SQL Server and containing different clinical trial data. It is filled from a variety of sources (document-oriented databases mainly). A lot of ETLs transform data within the warehouse on many occasions. This data can be loaded into smaller DBs for using by web apps oriented on small specific tasks. Some of the client«s customers asked to implement APIs for their needs. Such APIs often use stored procedures and different queries.

In general, there is a pretty big amount of code on the DBMS side.

As you can see from the introduction, DB code is part of the application code and it can contain bugs too.

I guess a lot of us are familiar with Boehm«s Curve: bugs are always more expensive to fix later on in the process. An error which was made on an earlier development stage and localized on a later one can cost more. This is due to the necessity of going through a lot of interim steps (coding, unit-testing, integration testing, system testing, etc.) twice: for debugging and for returning the code to the stage where it was found. This effect is true for the warehouse case too. If there is an error in an ETL procedure and the data is modified multiple times, we must:

- go through all the data transformation steps back to the source of the problem

- fix the problem

- derive the proper data again (additional manual edits may be required)

- make sure there is no other broken data caused by the bug.

Don«t forget that we don«t sell soft toys. A mistake in such area as clinical trials may harm not only business but also human health.

Since we«re talking about code testing, we mean unit and integration testing. These things are very repetitive and imply persistent regression. Strictly speaking, such testing is never done manually (well, except some singular cases probably).

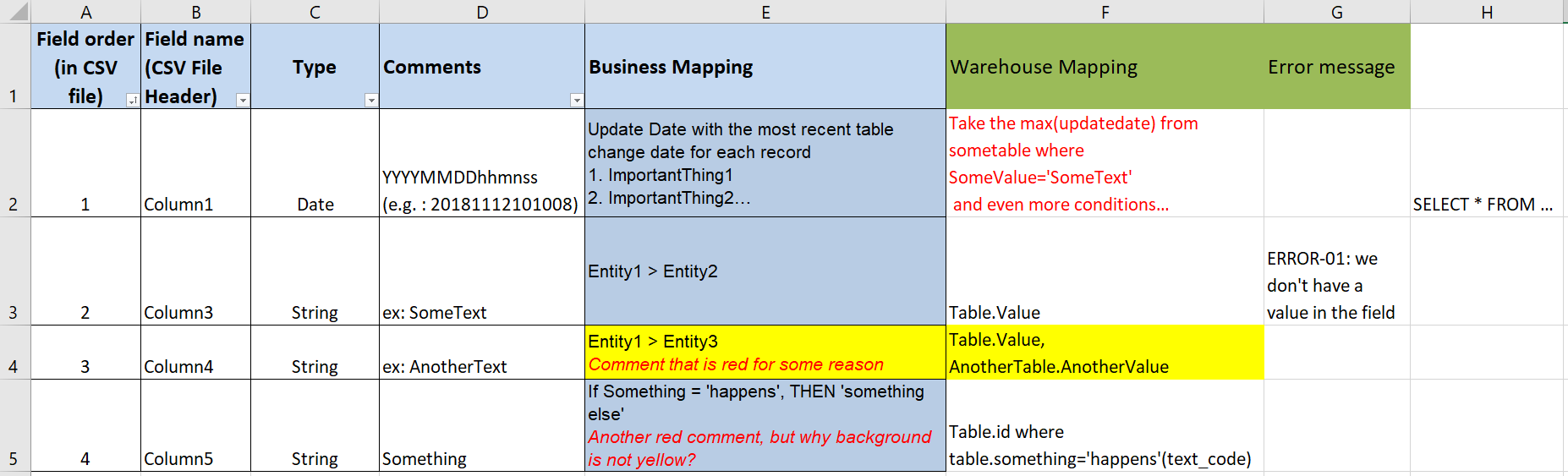

Nice bonus: tests can be supporting materials for code documenting. For example, requirements may look like this (clickable):

XLS file, 2 columns with requirements + fragmented additional information in other columns + confusing markup. It can be difficult to restore the initial wishes if necessary. Tests can help to record implementation nuances. Of course, they shouldn«t be considered as a replacement for documentation.

Unfortunately, testing complexity increases with the code complexity growth, so, this effect can be smoothed over.

Tests can be an additional security layer against spontaneous merges. CI auto tests help with this problem because of their formalism.

So, if we have decided to use automation, we need to choose a tool for it.

In case of DB code testing, I see 2 approaches: SQL-powered (when a tool is functioning in DBMS directly) and non-SQL-powered. Here are the main differences I found:

In case of SQL Server, we have several choices:

The scale «Excellent — Failing» is subjective, sorry, it«s hard to find a way around.

«First appearance» — the earliest date of framework appearance which I could find — the earliest release or commit.

As you can see, SQL-powered alternatives were abandoned quite a long time ago, and tSQLt is the only currently supported product. Besides, tSQLt wins functionally. The only thing is, T.S. T. boasts a bit richer set of assertions than tSQLt; however, I doubt this can outweigh all cons.

The tSQLt documentation has some nuances, I«ll describe them later.

In the non-SQL-powered world, things are not so clear. Alternatives are developing, although not super-actively. DbFit is a pretty interesting tool based on the FitNesse framework. It implies using wiki markup for writing tests. Slacker is an interesting one too: BDD approach is suggested for DB code testing.

I should say about assertions in non-SQL-powered solutions. At first sight, the amount of assertions is less, and we can think that such tools are worse. But we should keep in mind that they are fundamentally different from tSQLt, so, such superficial glance is incorrect.

The latest row — «NUnit, etc.» — is more like a reminder. A lot of usual unit-testing frameworks may be applied to the DB code with the help of additional libraries. There are a lot of N/A«s in this row because this row, in fact, includes multiple tools. That is the source of «nuances» in the «assertions» column — different tools can provide different sets and there is no guarantee that all assertions can be applied to DB.

As another interesting metric, we can consider Google trends.

Nuances:

- I decided not to include Slacker because this name can mean different things (and queries like «Slacker framework» are barely seen on the graph).

- Just out of curiosity (and because one slot remained empty), I«ve added the T.S. T. trend. But it hardly shows us the real picture because it«s abbreviation which can mean different things too.

- I haven«t included NUnit and its analogues. These tools are frameworks for «usual» code testing, so their trends are not descriptive for our context.

As you can see, tSQLt is the most searchable tool on the list. Another (less) popular tool is DbFit. Other tools have limited popularity.

By and large, we can see that tSQLt shines against the background.

It is easy to guess that tSQLt is a SQL-powered unit-testing framework. The official site is https://tsqlt.org.

It is promised that tSQLt supports SQL Server starting from 2005 SP2. I haven«t checked such early revisions, but I don«t see any problems with 2012 on our dev-server and 2017 on my local machine.

Open source, Apache 2.0 license, available on GitHub. As usual, we can fork, contribute, use for free in commercial projects and, what«s more important, need not be afraid of spyware in CLR.

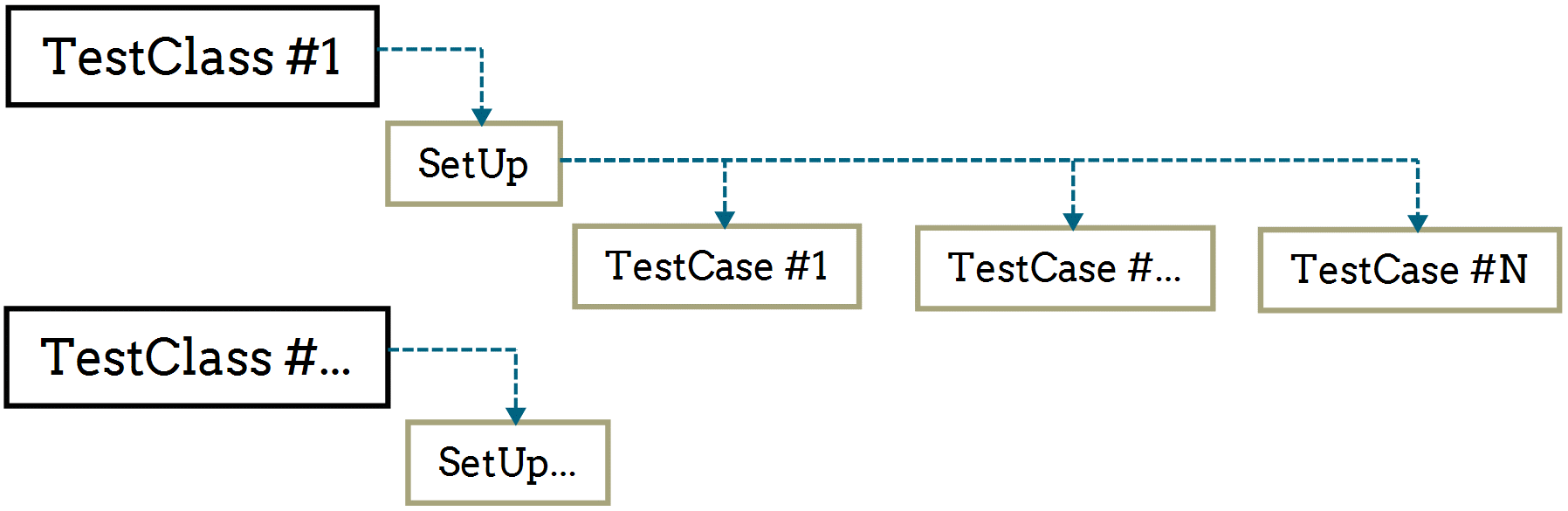

Test cases are stored procedures. They can be combined into test classes (test suite in xUnit terminology).

Test classes are nothing else but DB schemas. tSQLt requires to register them with the NewTestClass procedure which adds test classes to a special table.

It«s possible to determine a SetUp procedure. Such procedure will run before each separated test case run.

Teardown-procedure after test case run is not required. Each test case with its SetUp is run in a separated transaction which is rolled back after results gathering. It«s very convenient but has some negative consequences — I«ll describe them a bit later.

The framework allows running test cases one at a time, the whole test classes at once or even all registered test classes with one single command.

Unwilling to repeat the official guide, I will show tSQLt features on examples.

Disclaimer:

- examples are simplified

- the original code is not completely mine — it«s rather collective creations

- example 2 is fictionalized by me in order to demonstrate features more fully.

Example #1: CsvSql

The following was implemented upon the request of one of the client«s customers. There are SQL-queries stored in Nvarchar (MAX) fields. Minimal UI is created for viewing them. Result sets generated by these queries are used in backend for further composing as CSV-files. The CSV-files can be requested by an API call.

Result sets are big and contain a huge number of columns. A hypothetical example of such result set:

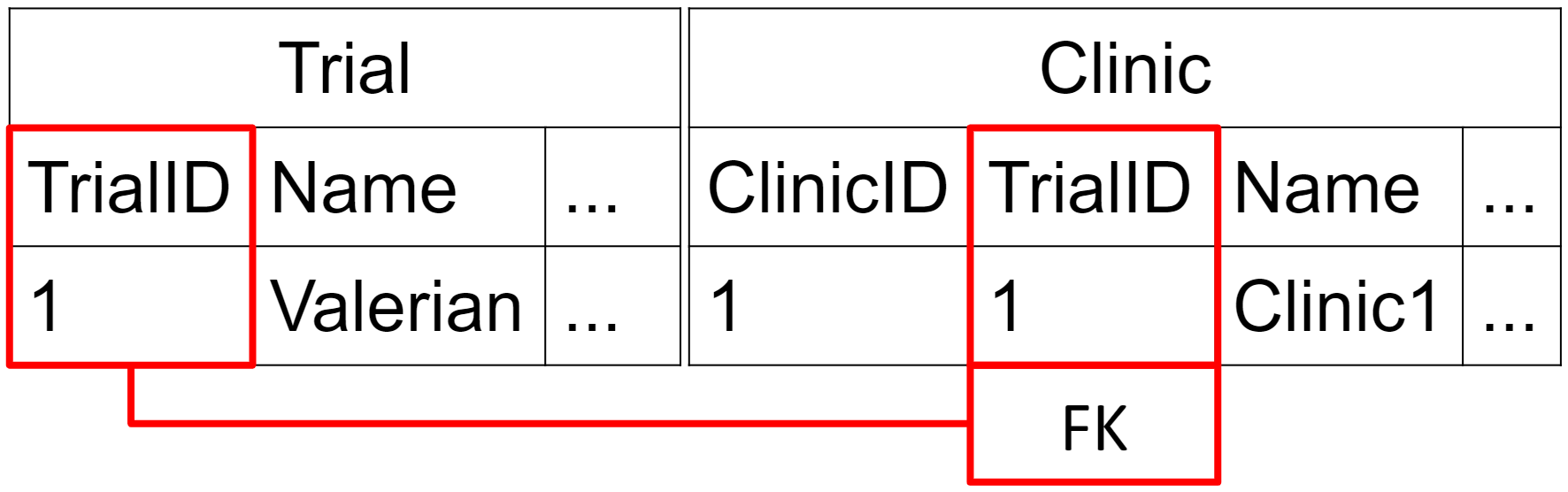

This result set represents clinical trial data. Let«s take a closer look at [ClinicsNum] calculation. We have 2 tables: [Trial] and [Clinic].

There is an FK: [Clinic].[TrialID] → [Trial].[TrialID]. Obviously, it«s enough to use COUNT (*) to derive a number of clinics:

SELECT COUNT(*), ...

FROM dbo.Trial

LEFT JOIN dbo.Clinic

ON Trial.ID = Clinic.TrialID

WHERE Trial.Name = @trialName

GROUP BY

...

How can we test such a query? First, let«s use stub FakeTable, which will make our further job a lot easier.

EXEC tSQLt.FakeTable 'dbo.Trial';

EXEC tSQLt.FakeTable 'dbo.Clinic';

FakeTable makes a simple thing — renames old tables and creates new ones with the same name. The same names, the same columns, but without constraints and triggers.

We need this because:

- Test DB can contain some data which can prevent a proper run of the test. FakeTable allows us not to depend on them.

- Usually, we need to fill only a few columns for the test purposes. The table can contain a lot of them, often containing constraints and triggers. We make it easier to insert data later — we will insert only required for the test information, keeping the test as minimalistic as possible.

- There will be no unwanted trigger runs, so, we need not worry about post-effects.

Then we insert required test data:

INSERT INTO dbo.Trial

([ID], [Name])

VALUES

(1, 'Valerian');

INSERT INTO dbo.Clinic

([ID], [TrialID], [Name])

VALUES

(1, 1, 'Clinic1'),

(2, 1, 'Clinic2');

We derive the query from the DB, create [Actual] table and fill it with the result

set from the query.

DECLARE @sqlStatement NVARCHAR(MAX) = (SELECT…

CREATE TABLE actual ([TrialID], ...);

INSERT INTO actual

EXEC sp_executesql @sqlStatement, ...

Now, we fill up [Expected] — our expected values:

CREATE TABLE expected (

ClinicsNum INT

);

INSERT INTO expected SELECT 2

I want to draw your attention that we have only one column in the [Expected] table, though we have the full set in the [Actual] column.

This is due to one useful feature of the AssertEqualsTable procedure which we will use for values verification.

EXEC tSQLt.AssertEqualsTable

'expected',

'actual',

'incorrect number of clinics';

It compares only those columns which are presented in both tables. It«s very convenient in our case because the query under test returns a lot of columns, each connected with pretty complicated logic. We don«t want to inflate test cases, so this feature really helps. Of course, this feature is a double-edged sword. If [Actual] is filled via SELECT TOP 0 and at one point an unexpected column appears, such test case won«t catch this. You must write additional checks for covering this.

AssertEqualsTable twin procedures

It worth mentioning that tSQLt contains 2 procedures like AssertEqualsTable. They are AssertEqualsTableSchema and AssertResultSetsHaveSameMetaData. The first one does the same as AssertEqualsTable but on tables» metadata. The second one does the same but on result sets» metadata.

Example #2: Constraints

The previous example has shown us how we can remove constraints. But what if we need to check them? Technically, constraints are part of logic too, and they can be considered as a candidate for covering by tests.

Consider the situation from the previous example. 2 tables — [Trial] and [Clinic]; [TrialID] FK:

Let«s try writing a test case for checking it. First, like in the previous case, we fake the tables:

EXEC tSQLt.FakeTable '[dbo].[Trial]'

EXEC tSQLt.FakeTable '[dbo].[Clinic]'

The aim is the same — getting rid of unnecessary limits. We want isolated checks without undue effort.

Next, we return the constraint which we want to test using ApplyConstraint:

EXEC tSQLt.ApplyConstraint

'[dbo].[Clinic]',

'Trial_FK';

Now we have a configuration for the check. The check itself is that trying to insert data will cause an exception. For the test case passing we need to catch this exception. Exception handler ExpectException can help.

EXEC tSQLt.ExpectException

@ExpectedMessage = 'The INSERT statement conflicted...',

@ExpectedSeverity = 16,

@ExpectedState = 0;

We can try to insert non-insertable after the handler setting.

INSERT INTO [dbo].[Clinic] ([TrialID])

VALUES (1)

The exception was catched. Test pass.

ApplyConstraint twin procedures

The way of testing triggers proposed by tSQLt authors is similar to testing constraints. We can use ApplyTrigger procedure for returning the trigger to the table. After that, everything goes as in the example above — start the trigger, check the result.

ExpectNoException — the ExpectException«s antonym

There is an ExpectNoException procedure for the cases when exception mustn«t happen. It works the same way as ExpectException works except that the test fails in case of exception happening.

Example #3: Semaphore

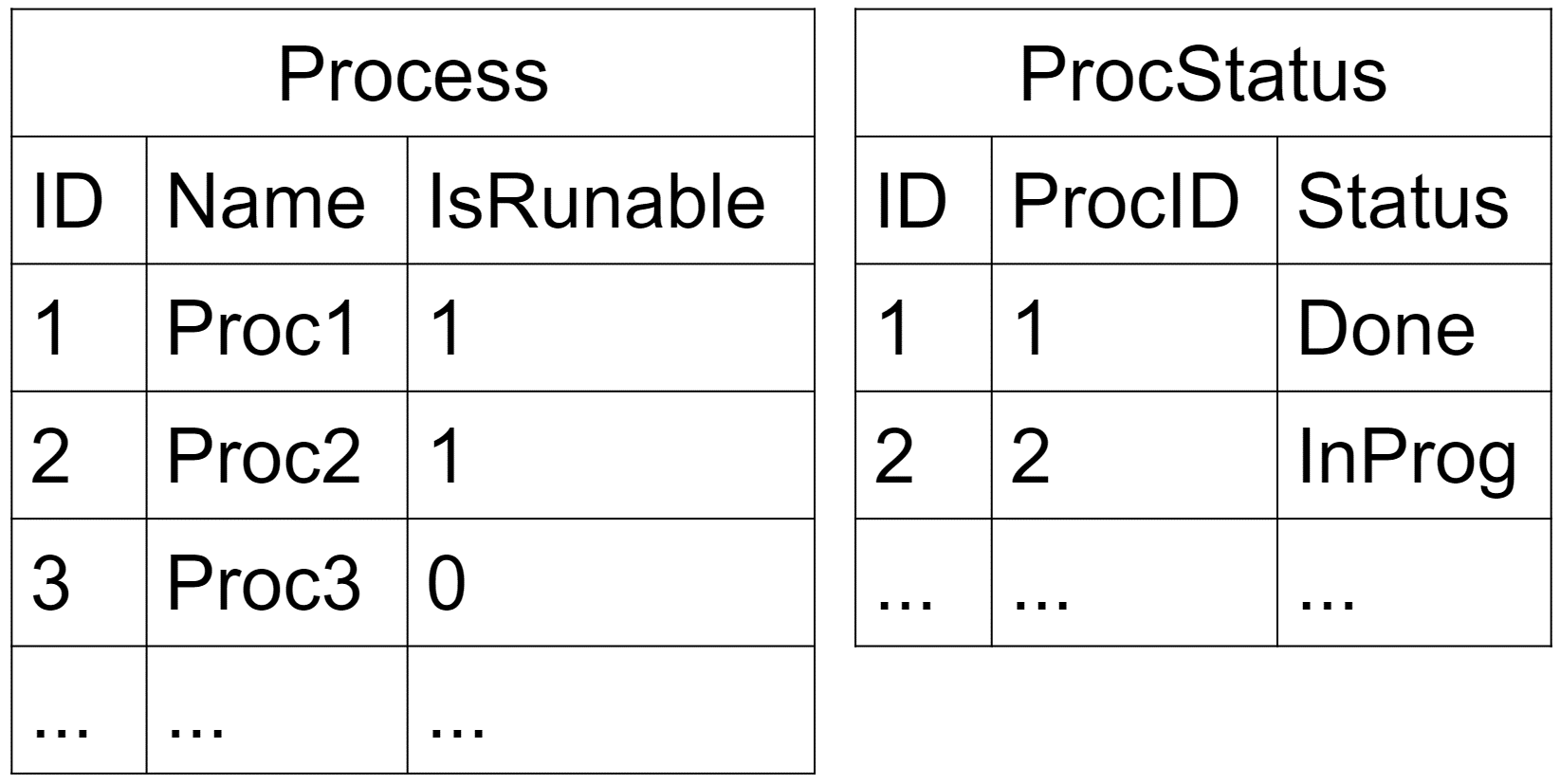

There are some stored procedures and windows services. The start of their execution can be caused by different outer events. However, the order of their execution is fixed. So, it«s required to implement access control on the DB side — i.e. a semaphore. In our case, the semaphore is a group of stored procedures working together.

Let«s look at a procedure within the semaphore. We have 2 tables — [Process] and [ProcStatus]:

The [Process] table contains a list of processes allowed for execution. [ProcStatus], obviously, contains the list of statuses of the process from the previous table.

So, what does our procedure do? First, it does the following checks:

- We have passed a process name as one of the input parameters of the procedure. This name is searched in the [Name] field of the [Process] table.

- If the name of the process has been found, it checks the [IsRunable] flag of the [Process] table.

- If the flag is ON, we consider that the process can run. The last check happens in the [ProcStatus] table. We need to make sure that the process is not currently run, which means the absence of records about the process with «InProg» status in the [ProcStatus] table.

If everything is OK and all checks are passed, we add a new record about our process into the [ProcStatus] table with status «InProg». The ID of this new record is returned with ProcStatusId output parameter.

If something has gone wrong, we expect the following:

- An email to a system administrator is sent.

- ProcStatusId = -1 is returned.

- No new [ProcStatus] records added.

Let«s create a test case for checking the case of process absence in the [Process] table.

We use FakeTable again. It«s not so critical here, but it can be convenient because:

- It«s guaranteed there will be no data which can disrupt the test case execution.

- The further check of new [ProcStatus] records absence will be simplified.

EXEC tSQLt.FakeTable 'dbo.Process';

EXEC tSQLt.FakeTable 'dbo.ProcStatus';

There is a [SendEmail] procedure whose name speaks for itself. We need to catch its call. tSQLt suggests using SpyProcedure mock for that.

EXEC tSQLt.SpyProcedure 'dbo.SendEmail'

SpyProcedure does the following:

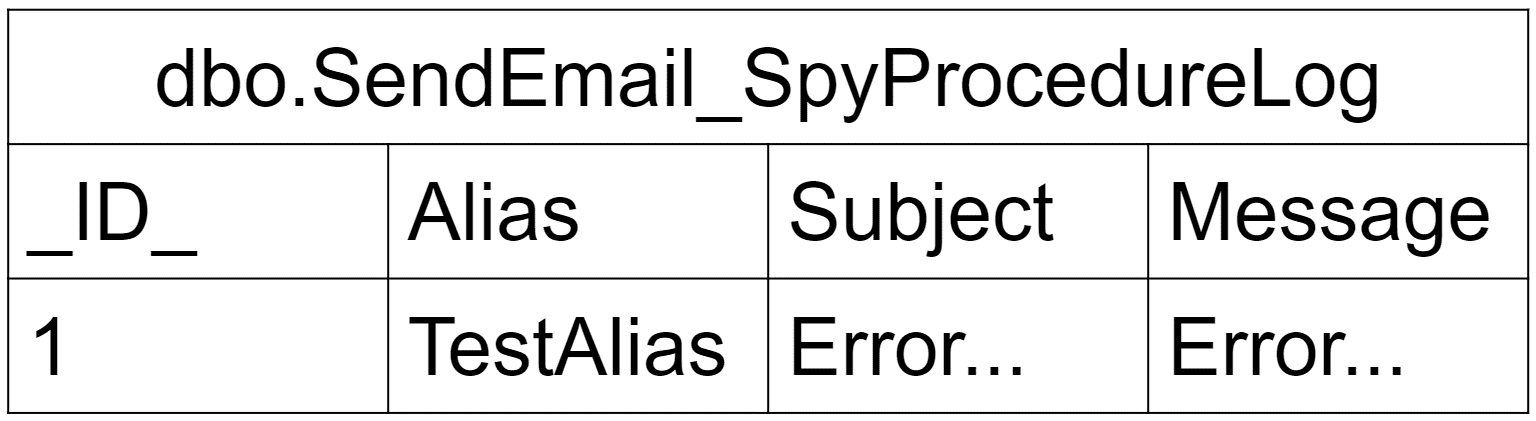

- Creates a table with a name which looks like [dbo].[ProcedureName_SpyProcedureLog]

- Just like FakeTable, replaces the original procedure with an automatically generated one, with the same name, but with logging logic inside. You can also add your own logic to the generated procedure if required.

It«s not difficult to guess that logs are recorded to [dbo].[SendEmail_SpyProcedureLog] table. This table contains an [_ID_] column which is for calls» sequence numbers. Subsequent columns are named after parameters passed to the procedure and used for collecting them, so, the parameters» values can be verified as well.

The last thing we need to do before the semaphore call is to create a variable for storing the [ProcStatusId] value (to be more exact, -1, as the record will not be added).

DECLARE @ProcStatusId BIGINT;

We call the semaphore:

EXEC dbo.[Semaphore_JobStarter]

'SomeProcess',

@ProcStatusId OUTPUT; -- here we get -1

Now we have all the data required for the checks. Let«s start from checking

that the message has been sent.

IF NOT EXISTS (

SELECT *

FROM dbo.SendEmail_SpyProcedureLog)

EXEC tSQLt.Fail 'SendEmail has not been run.';

In this case, we don«t check the passed parameters and test the fact of sending only. I want to draw your attention to the Fail procedure. It allows us to «officially» fail a test case. If you need to build a sophisticated construction, Fail can help.

Now we check the absence of records in the [ProcStatus] table with the AssertEmptyTable procedure.

EXEC tSQLt.AssertEmptyTable 'dbo.ProcStatus';

This is where FakeTable we used in the beginning helped us. With it, we may expect an empty table and test using a single line of code. The right way of checking this without table faking would be to compare the number of rows before and after procedure execution, and that would require more actions.

We can easily check the ProcStatusId = -1 equality with AssertEquals.

EXEC tSQLt.AssertEquals

-1,

@ProcStatusId,

'Wrong ProcStatusId.';

AssertEquals is minimalistic. It just compares 2 values, nothing extraordinary.

AssertEquals twin procedures

We have the following procedures for values comparison:

- AssertEquals

- AssertNotEquals

- AssertEqualsString

- AssertLike

The names are self-explanatory, I think. The only procedure I want to emphasize is AssertEqualsString. It«s the procedure dedicated to string values verification. Why do we need one more procedure, considering given universal AssertEquals? The thing is, AssertEquals/AssertNotEquals/AssertLike work with SQL_VARIANT type. NVARCHAR (MAX) is not included in SQL_VARIANT, so tSQLt developers had to make an additional procedure.

FakeFunction

At a push, we can call FakeFunction a procedure similar to SpyProcedure. This fake allows replacing any function with a simpler one. As SQL Server functions work like a toothpaste tube (the result is returned through the only «hole»), it is technically impossible to implement a logging functionality. Inner logic replacement is the only available way.

I want to tell you about some pitfalls which you can face during tSQLt usage. In this case «pitfalls» mean some issues which are caused by SQL Server restrictions and/or which are impossible to be resolved by framework developers.

Transactions rollback and dooming

The first and the main problem faced by our team is the transactions rollback and dooming. SQL Server can«t rollback nested transaction separately. It always rolls back all the transactions up to the outermost. Considering that tSQLt wraps each test into a separate transaction, it can become a problem because rollback inside a stored procedure can break a test run with a non-descriptive execution error.

As a workaround, we use savepoints. The idea is simple. At the start, we check if we are inside a transaction or not. If yes, we suppose it«s a tSQLt transaction and put a savepoint, so we will roll back to it if necessary. If no, we start a new transaction. In fact, we don«t allow nesting.

The problem is complicated by transaction dooming — it can happen if an exception was thrown. A doomed transaction cannot be committed as well as rolled back to a savepoint, so we must roll it back up to outermost transaction again.

Considering the points described above, we must use the following structure:

DECLARE @isNestedTransaction BIT =

CASE

WHEN @@trancount > 0

THEN 'true'

ELSE 'false'

END;

BEGIN TRY

IF @isNestedTransaction = 'false'

BEGIN TRANSACTION

ELSE

SAVE TRANSACTION SavepointName;

-- something useful

IF @isNestedTransaction = 'false'

COMMIT TRANSACTION;

END TRY

BEGIN CATCH

DECLARE @isCommitable BIT =

CASE WHEN XACT_STATE() = 1

THEN 'true'

ELSE 'false'

END;

IF @isCommitable = 'true' AND @isNestedTransaction = 'true'

ROLLBACK TRANSACTION SavepointName;

ELSE

ROLLBACK;

THROW;

END CATCH;

Let«s review the code piece by piece. First, we need to determine if we are inside a transaction or not.

DECLARE @isNestedTransaction BIT =

CASE WHEN @@trancount > 0

THEN 'true'

ELSE 'false'

END;

After deriving the @isNestedTransaction flag, we can start the TRY-block and set a savepoint or start a transaction depending on the situation.

BEGIN TRY

IF @isNestedTransaction = 'false'

BEGIN TRANSACTION

ELSE

SAVE TRANSACTION SavepointName;

-- something useful

After doing something useful, we commit the results if it«s a «real» procedure run.

-- something useful

IF @isNestedTransaction = 'false'

COMMIT TRANSACTION;

END TRY

Of course, if it«s a test case run, we don«t need to commit anything. tSQLt will roll the changes back in the end automatically.

If something has gone wrong and we get into the CATCH block, we need to determine if the transaction is committable or not.

BEGIN CATCH

DECLARE @isCommitable BIT =

CASE WHEN XACT_STATE() = 1

THEN 'true'

ELSE 'false'

END;

We can roll back to the savepoint only if:

- The transaction is commitable

- It«s a test run, so, savepoint exists.

In all other cases we must roll back the whole transaction.

IF @isCommitable = 'true' AND @isNestedTransaction = 'true'

ROLLBACK TRANSACTION SavepointName;

ELSE

ROLLBACK;

THROW;

END CATCH;

Yes, unfortunately, if we have reached uncommittable transaction state during a test run, we still get the execution error.

FakeTable and the Foreign key issue

Let«s review familiar [Trial] and [Clinic] tables

We remember about [TrialID] FK. What issue can it cause? In the examples above we applied FakeTable on both tables. If we use it on one of them only, we will reach the following setup:

So, an attempt to insert a record into [Clinic] can be failed even if we have prepared data in the fake-version of [Trial].

[dbo].[Test_FK_Problem] failed: (Error) The INSERT statement conflicted with the FOREIGN KEY constraint "Trial_Fk". The conflict occurred in database "HabrDemo", table "dbo.tSQLt_tempobject_ba8f36353f7a44f6a9176a7d1db02493", column 'TrialID'.[16,0]{Test_FK_Problem,14}

Conclusion: fake all or none. In case of none, obviously, you should prepare a DB with all required test data.

SpyProcedure on system procedures

Unfortunately, we can«t spy on system procedures:

[HabrDemo].[test_test] failed: (Error) Cannot use SpyProcedure on sys.sp_help because the procedure does not exist[16,10] {tSQLt.Private_ValidateProcedureCanBeUsedWithSpyProcedure,7}

In the semaphore example, we tracked calls of the [SendEmail] procedure, which was created by our developers. In this case, it was not required by testing only. It was necessary to create a separate procedure because it is needed to prepare some data before sending. But you should be mentally prepared to write an interlayer procedure in order to meet testing aims.

Quick installation

tSQLt installation consists of 2 steps and takes around 2 minutes. You need to activate CLR if it«s not currently active and execute a single SQL script. That«s all: now you can add your first test class and write test cases.

Quick learning

tSQLt is easy to learn. It took a bit more than one workday for me. I asked colleagues and it appears to take around 1 workday for others too. I doubt it can take much more time.

Quick CI integration

It took around 2 hours to setup CI integration on our project. The time can vary, of course, but it«s not a problem in general, and it can be done quickly.

A broad set of instruments

It«s subjective, but in my view the tSQLt functionality is rich and the lion«s share of needs can be covered by it. If it«s not enough, you can always use Fail procedure for rare and sophisticated cases.

Convenient documentation

Official guides are convenient and consistent. You can easily understand tSQLt usage in a short period even if it«s your first unit testing tool.

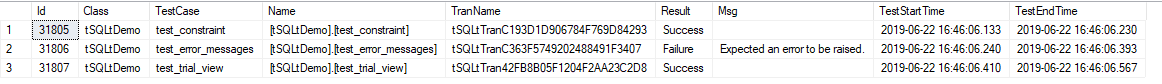

Clear output

Test output can be taken in an illustrative text format:

[tSQLtDemo].[test_error_messages] failed: (Failure) Expected an error to be raised.

[tSQLtDemo].[test_tables_comparison] failed: (Failure) useful and descriptive error message

Unexpected/missing resultset rows!

|_m_|Column1|Column2|

+---+-------+-------+

|< |2 |Value2 |

|= |1 |Value1 |

|= |3 |Value3 |

|> |2 |Value3 |

+----------------------+

|Test Execution Summary|

+----------------------+

|No|Test Case Name |Dur(ms)|Result |

+--+------------------------------------+-------+-------+

|1 |[tSQLtDemo].[test_constraint] | 83|Success|

|2 |[tSQLtDemo].[test_trial_view] | 83|Success|

|3 |[tSQLtDemo].[test_error_messages] | 127|Failure|

|4 |[tSQLtDemo].[test_tables_comparison]| 147|Failure|

-----------------------------------------------------------------------------

Msg 50000, Level 16, State 10, Line 1

Test Case Summary: 4 test case(s) executed, 2 succeeded, 2 failed, 0 errored.

-----------------------------------------------------------------------------

It can also be derived from the DB (clickable)…

…or even as XML.

The last format allows CI integration without any problems. Specifically, we use tSQLt together with Atlassian Bamboo.

Support of RedGate

As one of the pros, I can name the support of one of the largest DBA tools providers — RedGate. Their SQL Server Management Studio plugin named SQL Test works with tSQLt from start. Moreover, RedGate helps the main developer of tSQLt with dev-environment, according to his words in Google groups.

No temporary tables faking

tSQLt doesn«t allow faking temporary tables. Though, in case of necessity, you can use an unofficial addon. Unfortunately, this addon works with SQL Server 2016+ only.

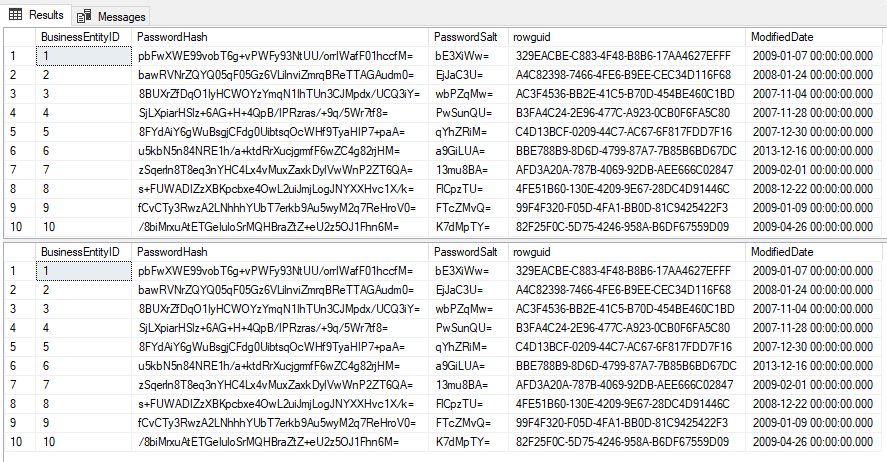

Work with outer DBs

tSQLt is designed to work with the code in the same DB in which the framework is installed. Thus, it can be impossible to use it with an outer DB. At least, fakes won«t work.

CREATE PROCEDURE [tSQLtDemo].[test_outer_db]

AS

BEGIN

SELECT TOP 10 * FROM [AdventureWorks2017].[Person].[Password]

EXEC tSQLt.FakeTable '[AdventureWorks2017].[Person].[Password]'

SELECT TOP 10 * FROM [AdventureWorks2017].[Person].[Password]

END

It looks like assertions work, but their workability is not guaranteed, of course.

CREATE PROCEDURE [tSQLtDemo].[test_outer_db_assertions]

AS

BEGIN

SELECT TOP 1 *

INTO #Actual

FROM [AdventureWorks2017].[Person].[Password]

SELECT *

INTO #Expected

FROM (

SELECT 'bE3XiWw=' AS [PasswordSalt]

) expectedresult;

EXEC tSQLt.AssertEqualsTable '#Expected', '#Actual', 'The salt is not salty';

END

Documentation bugs

Despite I mentioned above that the guides are convenient and consistent, the documentation has some issues. It contains outdated parts.

Example 1. «Quick start guide» suggests downloading the framework from SourceForge.

They moved from SourceForge as far as in 2015.

Example 2. ApplyConstraint guide utilizes bulky design with the Fail procedure inside an exception catching example. This can be replaced with simple and clear code using ExpectException.

CREATE PROCEDURE ConstraintTests.[test ReferencingTable_ReferencedTable_FK prevents insert of orphaned rows]

AS

BEGIN

EXEC tSQLt.FakeTable 'dbo.ReferencedTable';

EXEC tSQLt.FakeTable 'dbo.ReferencingTable';

EXEC tSQLt.ApplyConstraint 'dbo.ReferencingTable','ReferencingTable_ReferencedTable_FK';

DECLARE @ErrorMessage NVARCHAR(MAX); SET @ErrorMessage = '';

/* [NB] Why don’t we use ExceptException below? */

BEGIN TRY

INSERT INTO dbo.ReferencingTable

( id, ReferencedTableId )

VALUES ( 1, 11 ) ;

END TRY

BEGIN CATCH

SET @ErrorMessage = ERROR_MESSAGE();

END CATCH

IF @ErrorMessage NOT LIKE '%ReferencingTable_ReferencedTable_FK%'

BEGIN

EXEC tSQLt.Fail 'Expected error message containing ''ReferencingTable_ReferencedTable_FK'' but got: ''',@ErrorMessage,'''!';

END

END

GO

And this is expected, because of…

Partial abandonment

There was a prolonged pause in development from the beginning of 2016 until June 2019. Yes, unfortunately, this tool is partially abandoned. The development has slowly started in 2019, according to GitHub. Though official Google Groups have a thread where Sebastian, the main tSQLt developer, was asked about the future of the project. The last question was asked on 2nd March 2019, with no answer.

SQL Server 2017 issue

tSQLt installation may require some additional actions if you«re using SQL Server 2017. Microsoft implemented the first security change since 2012 in this release. «CLR strict security» server-level flag has been added. This flag disallows creation of unsigned assemblies» (even SAFE). Detailed description deserves a separate article (and, fortunately, we already have a good one; see also following articles in the sequence. Just be mentally prepared for this.

Of course, I could attribute this issue to the «pitfalls», but this issue can be resolved by the tSQLt developers. GitHub issue has already been risen. Still, it has not been resolved since October 2017.

tSQLt is not one of a kind. Though you can«t use it in other DBMS because of CLR and T-SQL nuances, you still can find something similar. It is worth mentioning that these alternatives are not very close to tSQLt, so I mean SQL-powered approach.

For example, PostgreSQL users can try pgTAP. It«s a well-developed and actively developing tool using native PL/pgSQL for tests and TAP output format. The similar tool MyTap can help you with tests under MySQL. This framework is a bit less functional than pgTAP but still can be useful. And it«s in active development too. If you«re a happy Oracle user, you have an opportunity to use very powerful tool utPLSQL. It is developing very actively and provides a big number of features.

I wanted to convey 2 ideas:

The first: the usefulness of DB code testing. It«s not important if you«re using SQL Server, Oracle, MySQL, or something else. If your DB contains untested logic, you«re taking risks. As all other bugs in all other code, DB code bugs can damage the product and the company providing it.

The second: the tool choice. For those who work with SQL Server, tSQLt, even if it is not a 100% winner, certainly deserves attention. Despite slow development and some issues, it«s still a practical framework that could make your job a lot easier.