How to receive data from Google Analytics using R in Microsoft SQL Server

In this article I want to show in detail how you can use R in Microsoft SQL Server to get data from Google Analytics (and generally from any API).

The task — we have MS SQL server and we want to receive data in DWH by API

We will use googleAnalyticsR package to connect to Google Analytics (GA).

This package is chosen as an example due to its popularity. You can use another package, for example: RGoogleAnalytic.

Approaches to problem solving will be the same.

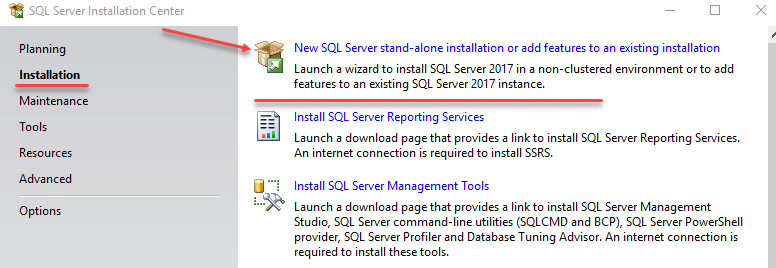

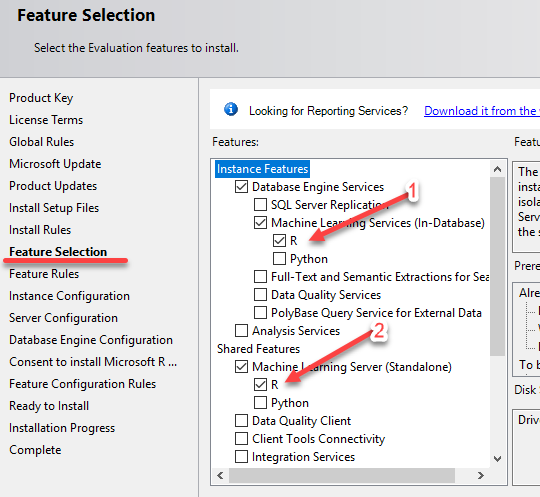

Install R on MS SQL Server

this is done via the standard interface for installing MS SQL components.

- This is R that SQL Server will interact with directly (called in SQL queries).

- You can work with the R client copy from R Studio without fear of breaking something on the database server.

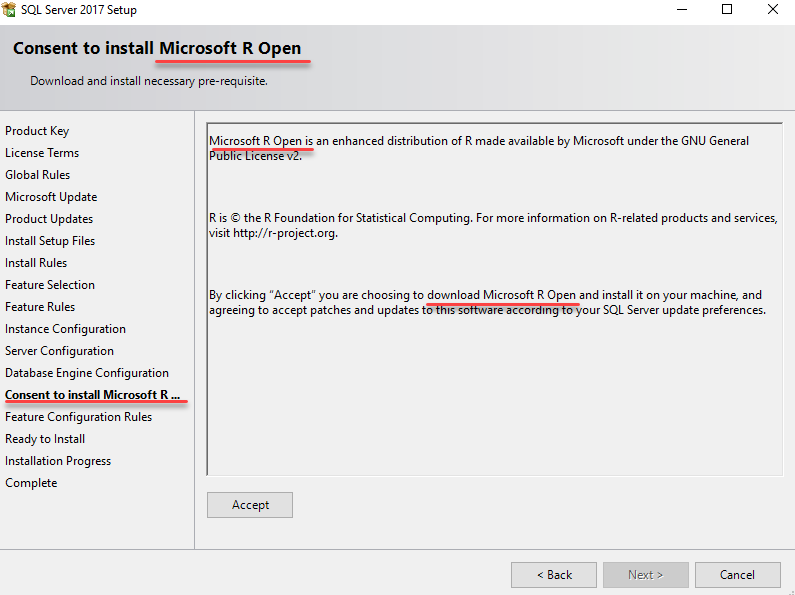

Accept the license agreement and pay attention that not оrdinary R will be installed but Microsoft R Open

Briefly, what it is:

Microsoft takes R Open, improves it with its packages and distributes for free.

Accordingly, packages of this R version are available for download not in CRAN, but in MRAN.

There is more to come. In fact, when installing MS SQL, we get not a clean MRAN, but something more — Microsoft ML Server.

This means to us that there will be additional packages in the set of R libraries — RevoScaleR.

RevoScaleR is designed for processing big data and building machine learning models on large datasets.

This information should be kept in mind since there is a high probability of questions related to different R versions.

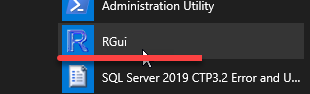

After installing the components, we get the Microsoft R interaction.

This console is not very convenient to use, so immediately download and install the free version RStudio.

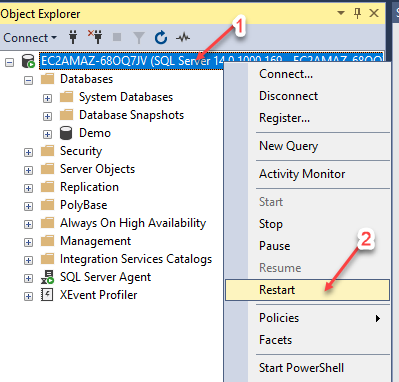

Configure SQL server to interact with R

Execute the following scripts in SSMS:

Allow scripts to run on SQL server

sp_configure 'external scripts enabled', 1;

RECONFIGURE WITH OVERRIDE; Server SQL restart

Make sure R scripts are executed

EXECUTE sp_execute_external_script

@language =N'R',

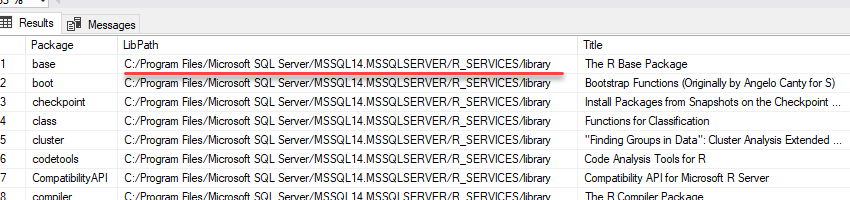

@script=N'print(version)';Find the location of R packages that are used by SQL server

declare @Rscript nvarchar(max)

set @Rscript = N'

InstaledLibrary <- library()

InstaledLibrary <- as.data.frame(InstaledLibrary$results )

OutputDataSet <- InstaledLibrary

'

EXECUTE sp_execute_external_script

@language = N'R'

, @script = @Rscript

WITH RESULT SETS (([Package] varchar(255) NOT NULL,

[LibPath] varchar(255) NOT NULL,

[Title] varchar(255) NOT NULL));

In my case, the path to R MS SQL packages:

C:/Program Files/Microsoft SQL Server/MSSQL14.MSSQLSERVER/R_SERVICES/library

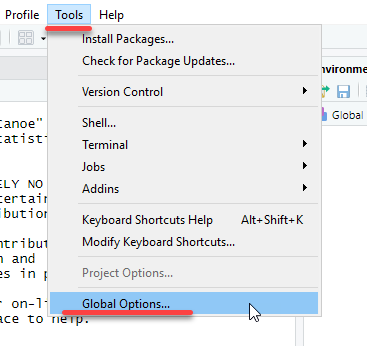

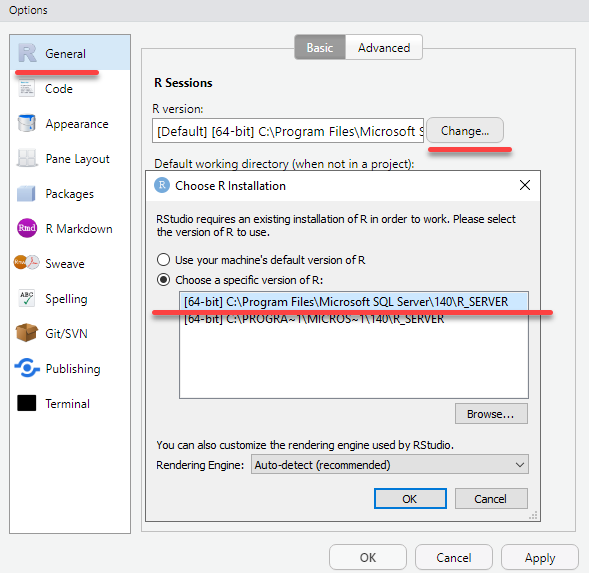

Run RStudio.

There is a good chance that there will be several R versions installed on the computer, so you need to make sure that we are working with the version of SQL server.

Settings will be applied after RStudio restart.

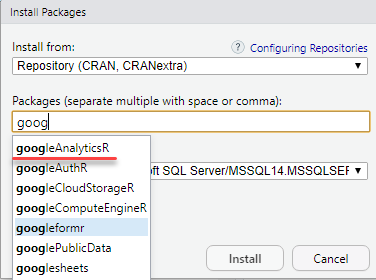

Install the googleAnalyticsR package

To RStudio using the command

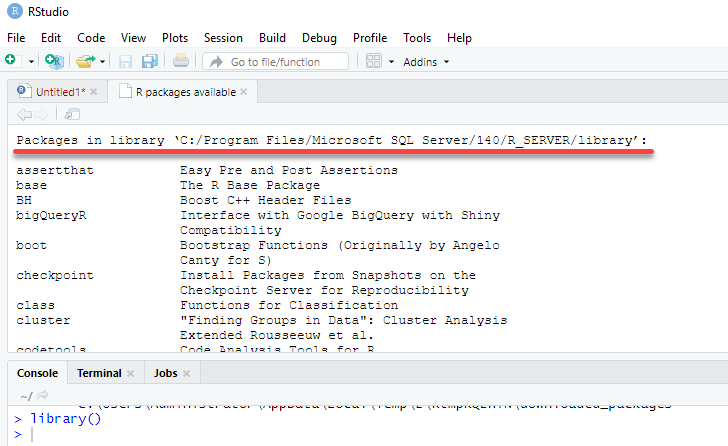

library()Find out the path to the package library of the R client version (with which RStudio works)

In my case, this path:

C:/Program Files/Microsoft SQL Server/140/R_SERVER/library

Install the googleAnalyticsR package via RStudio

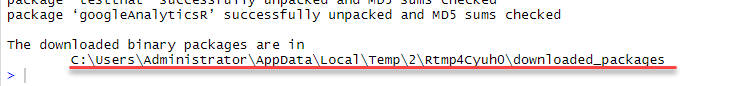

Here is some shaded nuance:

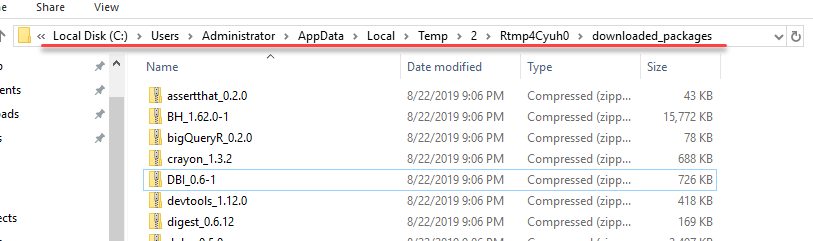

You can«t just add anything you want to the MS SQL system folders. Packages will be saved in a temporary directory as ZIP archives.

Go to the temporary folder and unzip all the packages in Explorer.

Unzipped packages must be copied to the R Services library directory (which MS SQL server works with).

In my example this is the folder

C:/Program Files/Microsoft SQL Server/MSSQL14.MSSQLSERVER/R_SERVICES/library

Unzipped packages must also be copied to the R client version (which RStudio works with)

In my example this is the folder

C:/Program Files/Microsoft SQL Server/140/R_SERVER/library

(we learned these paths from previously executed scripts)

Before copying to the R Services folder, it is better to save a copy of the library folder. Experience has proven that there are different situations and it is better to be able to return to existing packages.

When copying, replace all existing packages.

To consolidate the skill, repeat the exercise.

Only now we do not install packages, but update all existing ones.

(this is not necessary for connecting to GA, but it is better to have the latest versions of all packages)

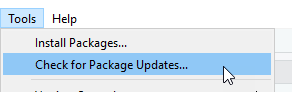

Check for new packages in RStudio

Packages will be downloaded to a temporary folder.

Perform the same actions as when installing new packages.

Checking MS SQL Internet access

declare @Rscript nvarchar(max)

set @Rscript = N'

library(httr)

HEAD("https://www.yandex.ru", verbose())

'

EXECUTE sp_execute_external_script

@language = N'R'

, @script = @RscriptSince SQL Server does not have Internet access by default, it is likely that the previous script will cause the following error.

Provide Internet access to R scripts from SQL.

SQL 2017

SQL 2019

In SSMS

-- Создаем базу данных для примера

create database Demo

go

use Demo

go

-- Создаем схему, для объектов базы данных связанных с Google Analytics

create schema GA

go

-- Создаем таблицу для сохранения токена доступа к GA

drop table if exists [GA].[token]

create table [GA].[token](

[id] varchar(200) not null,

[value] varbinary(max)

constraint unique_id unique (id))Get Google Analytics token

Execute the following code in RStudio:

This will open the Google services authentication window in your browser. You will need to log in and give permission to access Google Analytics.

# На всякий случай укажем тайм зону

Sys.setenv(TZ="Europe/Berlin")

library(googleAnalyticsR)

# Получаем токен

ga_auth()

PathTokenFile <- paste ( getwd(),"/", ".httr-oauth" , sep="")

TokenFile <- readBin(PathTokenFile, "raw", file.info(PathTokenFile)$size)

# Создали подключение к базе

conStr <- 'Driver={SQL Server};Server=EC2AMAZ-68OQ7JV;Database=Demo;Uid=Sa;Pwd=SaSql123'

ds <- RxOdbcData(table="ga.token", connectionString=conStr)

# Записываем токен в базу

rxWriteObject(ds, "ga_TokenFile", TokenFile)In SSMS, make sure that the token from Google is received and recorded in the database

Select * from [GA].[token]Check connection to GA via RStudio

# Проверяем подключение В RStudio

Sys.setenv(TZ="Europe/Berlin")

library(googleAnalyticsR)

# Получаем токен из базы

conStr <- 'Driver={SQL Server};Server=EC2AMAZ-68OQ7JV;Database=Demo;Uid=Sa;Pwd=SaSql123' # Аутентификация в базе по пользователю

ds <- RxOdbcData(table="ga.token", connectionString=conStr)

PathTokenFile <- paste ( getwd(),"/", ".httr-oauth" , sep="")

TokenFile <- rxReadObject(ds, "ga_TokenFile")

write.filename = file(PathTokenFile, "wb")

writeBin(TokenFile, write.filename)

close(write.filename)

Sys.setenv("GA_AUTH_FILE" = PathTokenFile)

# Прошли аутентификацию

ga_auth()

# определили ga_id

account_list <- ga_account_list()

ga_id <- account_list$viewId

# Сохранили результат запроса

OutputDataSet <-google_analytics(ga_id,

start="2019-01-01",

end="2019-08-01",

metrics = "sessions",

dimensions = "date")

OutputDataSetIf everything went well, add R script to SQL and execute the query.

drop table if exists #GA_session

create table #GA_session

(

[date] date,

[sessions] int

)

declare @Rscript nvarchar(max)

set @Rscript = N'

Sys.setenv(TZ="Europe/Berlin")

library(googleAnalyticsR)

# Получаем токен из базы

conStr <- ''Driver={SQL Server};Server=EC2AMAZ-68OQ7JV;Database=Demo;Uid=Sa;Pwd=SaSql123'' # Аутентификация в базе по пользователю

ds <- RxOdbcData(table="ga.token", connectionString=conStr)

PathTokenFile <- paste ( getwd(),"/", ".httr-oauth" , sep="")

TokenFile <- rxReadObject(ds, "ga_TokenFile")

write.filename = file(PathTokenFile, "wb")

writeBin(TokenFile, write.filename)

close(write.filename)

Sys.setenv("GA_AUTH_FILE" = PathTokenFile)

# Прошли аутентификацию

ga_auth()

# определили ga_id

account_list <- ga_account_list()

ga_id <- account_list$viewId

# Сохранили результат запроса

OutputDataSet <-google_analytics(ga_id,

start="2019-01-01",

end="2019-08-01",

metrics = "sessions",

dimensions = "date")

OutputDataSet$date <- as.character(OutputDataSet$date)

'

-- print @Rscript

insert into #GA_session ([date],[sessions])

EXECUTE sp_execute_external_script

@language = N'R',

@script = @Rscript

Select *

from #GA_session

order by [date] ascPay attention that the script uses a Username and Password, which is a good thing.

Therefore, we change the connection string to Windows authentication.

conStr <- ''Driver={SQL Server};Server=EC2AMAZ-68OQ7JV;Database=Demo;Trusted_Connection=true'' # Виндовс аутентификация <<<=== Лучше выбирать этот вариант – хранить пароли в скриптах неправильноAfter changing the authentication method, you will need to add database access rights to the service calling R.

(Of course, it is better to work with user groups. I simplified the solution as part of the demonstration)

We execute the SQL query as a procedure.

Create procedure Ga.Get_session

@Date_start date ='2019-01-01',

@Date_End date ='2019-08-01'

as

drop table if exists #GA_session

create table #GA_session

(

[date] date,

[sessions] int

)

declare @Rscript nvarchar(max)

set @Rscript =CONCAT( N'

# Проверяем подключение В RStudio

Sys.setenv(TZ="Europe/Berlin")

library(googleAnalyticsR)

# Получаем токен из базы

conStr <- ''Driver={SQL Server};Server=EC2AMAZ-68OQ7JV;Database=Demo;Trusted_Connection=true'' # Виндовс аутентификация <<<=== Лучше выбирать этот вариант - никто пароля неувидит

ds <- RxOdbcData(table="ga.token", connectionString=conStr)

PathTokenFile <- paste ( getwd(),"/", ".httr-oauth" , sep="")

TokenFile <- rxReadObject(ds, "ga_TokenFile")

write.filename = file(PathTokenFile, "wb")

writeBin(TokenFile, write.filename)

close(write.filename)

Sys.setenv("GA_AUTH_FILE" = PathTokenFile)

# Прошли аутентификацию

ga_auth()

# определили ga_id

account_list <- ga_account_list()

ga_id <- account_list$viewId

# Сохранили результат запроса

OutputDataSet <-google_analytics(ga_id,

start="' , @Date_start ,N'",

end="' , @Date_End ,N'",

metrics = "sessions",

dimensions = "date")

OutputDataSet$date <- as.character(OutputDataSet$date)

'

)

-- print @Rscript

insert into #GA_session ([date],[sessions])

EXECUTE sp_execute_external_script

@language = N'R',

@script = @Rscript

Select *

from #GA_session

order by [date] ascCheck the procedure operation

-- Default options

exec Ga.Get_session

-- Get sessions for a given period

exec Ga.Get_session

@Date_start ='2019-08-01',

@Date_End ='2019-09-01'R script is not complicated, it can always be copied to R Studio. Modify and save in SQL procedure.

For example, I only changed the dimensions parameter and now can load Landing Page by dates.

Create procedure [GA].[Get_landingPage_session]

@Date_start date ='2019-01-01',

@Date_End date ='2019-08-01'

as

drop table if exists #GA_session

create table #GA_session

(

[date] date,

landingPagePath nvarchar(max),

[sessions] int

)

declare @Rscript nvarchar(max)

set @Rscript =CONCAT( N'

# Проверяем подключение В RStudio

Sys.setenv(TZ="Europe/Berlin")

library(googleAnalyticsR)

# Получаем токен из базы

conStr <- ''Driver={SQL Server};Server=EC2AMAZ-68OQ7JV;Database=Demo;Trusted_Connection=true'' # Виндовс аутентификация <<<=== Лучше выбирать этот вариант - никто пароля неувидит

ds <- RxOdbcData(table="ga.token", connectionString=conStr)

PathTokenFile <- paste ( getwd(),"/", ".httr-oauth" , sep="")

TokenFile <- rxReadObject(ds, "ga_TokenFile")

write.filename = file(PathTokenFile, "wb")

writeBin(TokenFile, write.filename)

close(write.filename)

Sys.setenv("GA_AUTH_FILE" = PathTokenFile)

# Прошли аутентификацию

ga_auth()

# определили ga_id

account_list <- ga_account_list()

ga_id <- account_list$viewId

# Сохранили результат запроса

OutputDataSet <-google_analytics(ga_id,

start="' , @Date_start ,N'",

end="' , @Date_End ,N'",

metrics = "sessions",

dimensions = c("date" ,"landingPagePath"))

OutputDataSet$date <- as.character(OutputDataSet$date)

'

)

-- print @Rscript

insert into #GA_session ([date],landingPagePath,[sessions])

EXECUTE sp_execute_external_script

@language = N'R',

@script = @Rscript

Select *

from #GA_session

order by [date] ascchecking

exec [GA].[Get_landingPage_session]Basically, that«s it.

I would like to note that using R via SQL, you can get data from any API.

For example: receiving exchange rates.

-- https://www.cbr-xml-daily.ru

Declare @script nvarchar(max)

set @script = N'

encoding = "utf-8"

Sys.setlocale("LC_CTYPE", "russian")

Sys.setenv(TZ="Europe/Berlin")

library(httr)

url <- "https://www.cbr-xml-daily.ru/daily_json.js"

resp <- GET(url)

library(jsonlite)

Response <- fromJSON(content(resp, as = "text"))

OutputDataSet <- data.frame(matrix(unlist(Response$Valute$USD), nrow=1, byrow=T),stringsAsFactors=FALSE)

OutputDataSet <- rbind(OutputDataSet,data.frame(matrix(unlist(Response$Valute$EUR), nrow=1, byrow=T),stringsAsFactors=FALSE))

'

EXEC sp_execute_external_script

@language = N'R'

, @script = @script

with result SETS UNDEFINED or obtaining data from the first available API, some farms in Australia…

-- https://dev.socrata.com/

Declare @script nvarchar(max)

set @script = N'

library(httr)

url <- "https://data.ct.gov/resource/y6p2-px98.json?category=Fruit&item=Peaches"

resp <- GET(url)

library(jsonlite)

Response <- fromJSON(content(resp, as = "text"))

OutputDataSet <- as.data.frame(Response)

OutputDataSet <- OutputDataSet [,

c("category" ,

"item" ,

"farmer_id" ,

"zipcode" ,

"business" ,

"l" ,

"location_1_location",

"location_1_city" ,

"location_1_state" ,

"farm_name",

"phone1" ,

"website",

"suite")]

'

EXEC sp_execute_external_script

@language = N'R'

, @script = @script

with result SETS UNDEFINED In total:

● connection passwords are not stored anywhere

● rights are distributed centrally through active directory accounts

● no additional configuration files

● no Python fiddles containing passwords to the database

● all code is stored in the procedures and saved when the database is backed up

MS SQL 2017 database backup with full code is available here

(for playback, you need to install packages, distribute the rights, specify the name of your server)