Tarantool Kubernetes Operator

Kubernetes has already become a de-facto standard for running stateless applications, mainly because it can reduce time-to-market for new features. Launching stateful applications, such as databases or stateful microservices, is still a complex task, but companies have to meet the competition and maintain a high delivery rate. So they create a demand for such solutions.

We want to introduce our solution for launching stateful Tarantool Cartridge clusters: Tarantool Kubernetes Operator.

- Instead of a Thousand Words

- What the Operator Actually Does

- A Little About the Details

- How the Operator Works

- What the Operator Creates

- Summary

Tarantool is an open-source DBMS and an application server all-in-one. As a database, it has many unique characteristics: efficient hardware utilization, a flexible data schema, support for both in-memory and disk storage, and extensibility using the Lua language. As an application server, it lets you move the application code as close to the data as possible, with minimum response time and maximum throughput. Moreover, Tarantool has an extensive ecosystem providing ready-to-use modules for solving application problems: sharding, queue, modules for easy development (cartridge, luatest), solutions for operation (metrics, ansible), just to name a few.

For all its merits, the capabilities of a single Tarantool instance are always limited. You would have to create tens and hundreds of instances in order to store terabytes of data and process millions of requests, which already implies a distributed system with all its typical problems. To solve them, we have Tarantool Cartridge, which is a framework designed to hide all sorts of difficulties when writing distributed applications. It allows developers to concentrate on the business value of the application. Cartridge provides a robust set of components for automatic cluster orchestration, automatic data distribution, WebUI for operation, and developer tools.

Tarantool is not only about technologies, but also about a team of engineers working on the development of turnkey enterprise systems, out-of-the-box solutions, and support for open-source components.

Globally, all our tasks can be divided into two areas: the development of new systems and the improvement of existing solutions. For example, there is an extensive database from a well-known vendor. To scale it for reading, a Tarantool-based eventually consistent cache is placed behind it. Or vice versa: to scale the writing, Tarantool is installed in a hot/cold configuration: while the data is "cooling down", it is dumped to cold storage and, at the same time, into the analytics queue. Or a light version of an existing system is written (a functional backup) to back up the "hot" data using data replication from the main system. Learn more from the T+ 2019 conference reports.

All of these systems have one thing in common: they are somewhat difficult to operate. There are plenty of challenging tasks: quickly creating a cluster of 100+ instances backed up across 3 data centers; updating an application that stores data with no downtime or maintenance degradation; setting up backup and restore to prepare for a possible accident or human error; ensuring transparent component failover; organizing configuration management…

Tarantool Cartridge, which has just been released into open source, considerably simplifies distributed system development: it supports component clustering, service discovery, configuration management, instance failure detection and automatic failover, replication topology management, and sharding components.

It would be great if we could operate all of this as quickly as we develop it. Kubernetes makes it possible, but a specialized operator would make life even more comfortable.

Today we introduce the alpha version of Tarantool Kubernetes Operator.

Instead of a Thousand Words

We have prepared a small example based on Tarantool Cartridge, and we are going to work with it. It is a simple application: a distributed key-value storage with an HTTP interface. After start-up, we have the following:

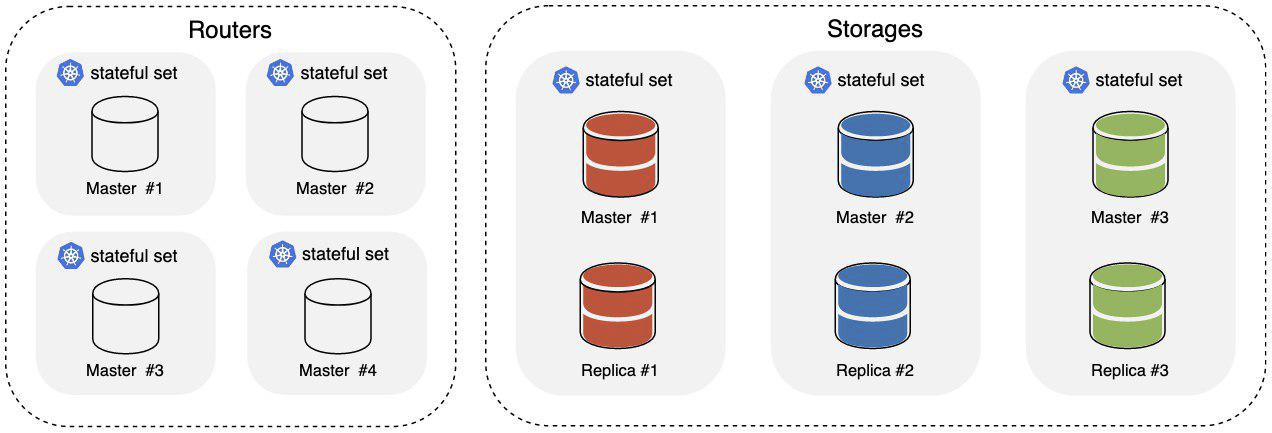

Where

- Routers are part of the cluster responsible for accepting and processing incoming HTTP requests;

- Storages are part of the cluster responsible for storing and processing data; three shards are installed out of the box, each one having a master and a replica.

To balance incoming HTTP traffic on the routers, a Kubernetes Ingress is used. The data is distributed in the storage at the level of Tarantool itself using the vshard component.
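To give a feel for what "distributed at the level of Tarantool itself" means, here is a rough sketch of how a key could end up on a particular shard. It is not vshard's actual code: zlib.crc32 stands in for the hash function, equal contiguous bucket ranges stand in for the real bucket placement, and the replica set names are hypothetical. The bucket count of 30000 is the value from the example cluster configuration.

```python
import zlib

BUCKET_COUNT = 30000  # value from the example cluster configuration


def bucket_id(key, bucket_count=BUCKET_COUNT):
    """Map a key to a bucket number in the range 1..bucket_count."""
    # zlib.crc32 is a stand-in hash; vshard's own hash function may differ.
    return zlib.crc32(key.encode("utf-8")) % bucket_count + 1


def replicaset_for(bucket, replicasets, bucket_count=BUCKET_COUNT):
    """Pick the replica set that owns a bucket, assuming equal contiguous ranges."""
    per_set = bucket_count // len(replicasets)
    index = min((bucket - 1) // per_set, len(replicasets) - 1)
    return replicasets[index]


shards = ["storage-0", "storage-1", "storage-2"]  # hypothetical names
b = bucket_id("user:42")
print(b, replicaset_for(b, shards))
```

The point of the extra bucket layer is that adding a shard means moving whole buckets between replica sets rather than rehashing every key.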

We need Kubernetes 1.14+, and minikube will do. You will also need kubectl. To start the operator, create a ServiceAccount, a Role, and a RoleBinding:

$ kubectl create -f https://raw.githubusercontent.com/tarantool/tarantool-operator/0.0.1/deploy/service_account.yaml

$ kubectl create -f https://raw.githubusercontent.com/tarantool/tarantool-operator/0.0.1/deploy/role.yaml

$ kubectl create -f https://raw.githubusercontent.com/tarantool/tarantool-operator/0.0.1/deploy/role_binding.yaml

Tarantool Operator extends Kubernetes API with its resource definitions, so let's create them:

$ kubectl create -f https://raw.githubusercontent.com/tarantool/tarantool-operator/0.0.1/deploy/crds/tarantool_v1alpha1_cluster_crd.yaml

$ kubectl create -f https://raw.githubusercontent.com/tarantool/tarantool-operator/0.0.1/deploy/crds/tarantool_v1alpha1_role_crd.yaml

$ kubectl create -f https://raw.githubusercontent.com/tarantool/tarantool-operator/0.0.1/deploy/crds/tarantool_v1alpha1_replicasettemplate_crd.yaml

Everything is ready to start the operator, so here it goes:

$ kubectl create -f https://raw.githubusercontent.com/tarantool/tarantool-operator/0.0.1/deploy/operator.yaml

We are waiting for the operator to start, and then we can proceed with starting the application:

$ kubectl create -f https://raw.githubusercontent.com/tarantool/tarantool-operator/0.0.1/examples/kv/deployment.yaml

The example YAML file declares an Ingress for the web UI, which is available at cluster_ip/admin/cluster. When at least one Ingress Pod is ready and running, you can go there to watch how new instances are added to the cluster and how its topology changes.

Now we wait for the cluster to become ready to use:

$ kubectl describe clusters.tarantool.io examples-kv-cluster

We are waiting for the following cluster Status:

…

Status:

State: Ready

…

That is all, and the application is ready to use!

Do you need more storage space? Then, let's add some shards:

$ kubectl scale roles.tarantool.io storage --replicas=3

If shards cannot handle the load, then let's increase the number of instances in the shard by editing the replica set template:

$ kubectl edit replicasettemplates.tarantool.io storage-template

Set the .spec.replicas value to two so that each replica set has two instances.

If a cluster is no longer needed, just delete it together with all the resources:

$ kubectl delete clusters.tarantool.io examples-kv-cluster

Did something go wrong? Create a ticket, and we will quickly work on it.

What the Operator Actually Does

The start-up and operation of the Tarantool Cartridge cluster is a story of performing specific actions in a specific order at a specific time.

The cluster itself is managed primarily via the admin API: GraphQL over HTTP. You can undoubtedly go a level lower and give commands directly through the console, but this doesn’t happen very often.

For example, this is how the cluster starts:

- We deploy the required number of Tarantool instances, for example, using systemd.

- Then we connect the instances into membership:

mutation { probe_instance: probe_server(uri: "storage:3301") }

- Then we assign the roles to the instances and specify the instance and replica set identifiers. The GraphQL API is used for this purpose:

mutation { join_server( uri:"storage:3301", instance_uuid: "cccccccc-cccc-4000-b000-000000000001", replicaset_uuid: "cccccccc-0000-4000-b000-000000000000", roles: ["storage"], timeout: 5 ) }

- Finally, we bootstrap the component responsible for sharding using the API:

mutation { bootstrap_vshard cluster { failover(enabled:true) } }

Easy, right?
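To make the ordering explicit, the start-up steps can be sketched as a list of HTTP requests carrying the GraphQL mutations shown above. This is only an illustration: the /admin/api path, the base URL, and the helper names are assumptions, and nothing is actually sent over the network.

```python
import json
import urllib.request

# Assumption: each instance serves the admin GraphQL API at this HTTP path.
ADMIN_API_PATH = "/admin/api"

PROBE = 'mutation { probe_instance: probe_server(uri: "%s") }'
JOIN = ('mutation { join_server(uri: "%s", instance_uuid: "%s", '
        'replicaset_uuid: "%s", roles: ["storage"], timeout: 5) }')
BOOTSTRAP = "mutation { bootstrap_vshard cluster { failover(enabled:true) } }"


def build_request(base_url, query):
    """Wrap a GraphQL document in a JSON POST request (nothing is sent here)."""
    body = json.dumps({"query": query}).encode("utf-8")
    return urllib.request.Request(
        base_url + ADMIN_API_PATH,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def startup_requests(base_url, instances):
    """Return the requests in the required order: probe, join, bootstrap."""
    requests = [build_request(base_url, PROBE % inst[0]) for inst in instances]
    requests += [build_request(base_url, JOIN % tuple(inst)) for inst in instances]
    requests.append(build_request(base_url, BOOTSTRAP))
    return requests


# (uri, instance_uuid, replicaset_uuid); the base URL below is hypothetical
instances = [("storage:3301",
              "cccccccc-cccc-4000-b000-000000000001",
              "cccccccc-0000-4000-b000-000000000000")]
reqs = startup_requests("http://localhost:8081", instances)
for r in reqs:
    print(r.get_method(), r.full_url, r.data.decode("utf-8"))
```

All requests go to the same base URL on purpose: mutating calls should be addressed to a single instance.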

Everything is more interesting when it comes to cluster expansion. The Routers role from the example scales easily: create more instances, join them to an existing cluster, and you’re done! The Storages role is somewhat trickier. The storage is sharded, so when adding or removing instances, it is necessary to rebalance the data by moving it to the new instances or away from the ones being removed. Failing to do so would result in underloaded instances in the first case or lost data in the second. And what if there is not just one cluster, but a dozen clusters with different topologies?
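As a back-of-the-envelope illustration of rebalancing, the sketch below computes how many buckets each replica set has to give away or receive when a new one is added. It assumes equal weights and an even split, and the replica set names are hypothetical; the real rebalancer is more involved.

```python
def target_counts(bucket_count, replicasets):
    """Split buckets evenly; the first few replica sets absorb the remainder."""
    base, extra = divmod(bucket_count, len(replicasets))
    return {name: base + (1 if i < extra else 0)
            for i, name in enumerate(replicasets)}


def rebalance_plan(current, replicasets, bucket_count):
    """Per replica set: buckets to receive (positive) or give away (negative)."""
    target = target_counts(bucket_count, replicasets)
    return {name: target[name] - current.get(name, 0) for name in replicasets}


current = {"storage-0": 10000, "storage-1": 10000, "storage-2": 10000}
expanded = ["storage-0", "storage-1", "storage-2", "storage-3"]
plan = rebalance_plan(current, expanded, 30000)
print(plan)
# {'storage-0': -2500, 'storage-1': -2500, 'storage-2': -2500, 'storage-3': 7500}
```

Until those 7500 buckets have actually moved, the new replica set stays empty, which is exactly the "underloaded instances" case.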

In general, this is all that Tarantool Operator handles. The user describes the necessary state of the Tarantool Cartridge cluster, and the operator translates it into a set of actions applied to the K8s resources and into certain calls to the Tarantool cluster administrator API in a specific order at a specific time. It also tries to hide all the details from the user.

A Little About the Details

While working with the Tarantool Cartridge cluster administrator API, both the order of the calls and their destination are essential. Why is that?

Tarantool Cartridge contains its own topology storage, service discovery component, and configuration component. Each instance of the cluster stores a copy of the topology and configuration in a YAML file:

    servers:
      d8a9ce19-a880-5757-9ae0-6a0959525842:
        uri: storage-2-0.examples-kv-cluster:3301
        replicaset_uuid: 8cf044f2-cae0-519b-8d08-00a2f1173fcb
      497762e2-02a1-583e-8f51-5610375ebae9:
        uri: storage-0-0.examples-kv-cluster:3301
        replicaset_uuid: 05e42b64-fa81-59e6-beb2-95d84c22a435
    …
    vshard:
      bucket_count: 30000
    ...

Updates are applied consistently using the two-phase commit mechanism. A successful update requires a 100% quorum: every instance must respond; otherwise, the update is rolled back. What does this mean in terms of operation? For the sake of reliability, all requests to the administrator API that modify the cluster state should be sent to a single instance, the leader, because otherwise we risk getting different configurations on different instances. Tarantool Cartridge does not know how to do a leader election (not just yet), but Tarantool Operator can. For you, this is just a fun fact, because the operator does everything.
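The "all or nothing" behaviour can be illustrated with a toy two-phase commit. This is a simplified model, not Cartridge's implementation: the Instance class and its methods are invented for the example, and real instances talk over the network rather than through method calls.

```python
class Instance:
    """A toy cluster member with an active and a pending configuration."""

    def __init__(self, name, reachable=True):
        self.name = name
        self.reachable = reachable
        self.config = {}
        self.pending = None

    def prepare(self, new_config):
        """Phase one: stage the config; an unreachable instance cannot vote."""
        if not self.reachable:
            return False
        self.pending = dict(new_config)
        return True

    def commit(self):
        """Phase two: make the staged config active."""
        self.config, self.pending = self.pending, None

    def abort(self):
        """Drop the staged config and keep the old one."""
        self.pending = None


def apply_config(instances, new_config):
    """Apply new_config everywhere, or nowhere if any instance fails to prepare."""
    prepared = []
    for inst in instances:
        if not inst.prepare(new_config):
            for p in prepared:
                p.abort()
            return False
        prepared.append(inst)
    for inst in prepared:
        inst.commit()
    return True


cluster = [Instance("a"), Instance("b"), Instance("c", reachable=False)]
new_config = {"vshard": {"bucket_count": 30000}}
print(apply_config(cluster, new_config))  # False: one instance did not respond
cluster[2].reachable = True
print(apply_config(cluster, new_config))  # True: everyone prepared and committed
```

Note how a single unreachable instance blocks the update for everyone, which is why stable identities and addresses matter so much.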

Every instance should also have a fixed identity, i.e. a set of instance_uuid and replicaset_uuid, as well as advertise_uri. If a storage suddenly restarts and one of these parameters changes, you run the risk of breaking the quorum. The operator is responsible for preventing this.
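One way to keep an identity stable across restarts is to derive it from names that do not change. The UUIDs in the topology file above carry the version 5 marker, which is consistent with name-based generation, but the exact scheme used by the operator is not described here. The namespace, the input strings, and the helper below are therefore assumptions made for illustration.

```python
import uuid

# Assumption: any fixed namespace works as long as it never changes.
NAMESPACE = uuid.NAMESPACE_DNS


def identity(pod_name, service_name, port=3301):
    """Derive a stable identity from names that survive Pod restarts."""
    replicaset_name = pod_name.rsplit("-", 1)[0]  # "storage-0-0" -> "storage-0"
    return {
        "advertise_uri": "%s.%s:%d" % (pod_name, service_name, port),
        "instance_uuid": str(uuid.uuid5(NAMESPACE, pod_name)),
        "replicaset_uuid": str(uuid.uuid5(NAMESPACE, replicaset_name)),
    }


first = identity("storage-0-0", "examples-kv-cluster")
after_restart = identity("storage-0-0", "examples-kv-cluster")
print(first == after_restart)  # True: same names give the same identity
```

With this approach nothing has to be remembered between restarts: the identity is a pure function of the Pod name and the service name.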

How the Operator Works

The purpose of the operator is to bring the system into the user-defined state and maintain the system in this state until new directions are given. In order for the operator to be able to work, it needs:

- A description of the desired system state.

- The code that would bring the system into this state.

- A mechanism for integrating this code into k8s (for example, to receive state change notifications).

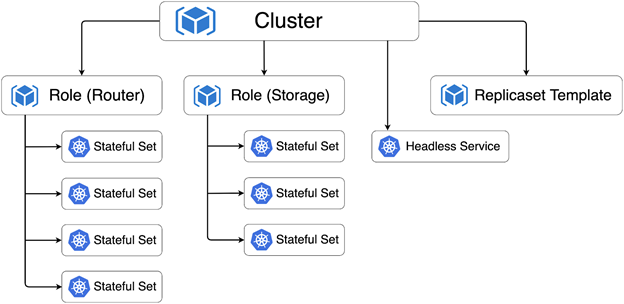

The Tarantool Cartridge cluster is described in terms of K8s using a Custom Resource Definition (CRD). The operator needs three custom resources united under the tarantool.io/v1alpha1 group:

- Cluster is a top-level resource that corresponds to a single Tarantool Cartridge cluster.

- Role is a user role in terms of Tarantool Cartridge.

- Replicaset Template is a template for creating StatefulSets (I will tell you a bit later why they are stateful; not to be confused with K8s ReplicaSet).

All of these resources directly reflect the Tarantool Cartridge cluster description model. Having a common dictionary makes it easier to communicate with the developers and to understand what they would like to see in production.

The code that brings the system to the given state is the Controller in terms of K8s. In the case of Tarantool Operator, there are several controllers:

- Cluster Controller is responsible for interacting with the Tarantool Cartridge cluster; it connects instances to the cluster and disconnects instances from the cluster.

- Role Controller is the user role controller responsible for creating StatefulSets from the template and maintaining the predefined number of them.
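The "maintaining the predefined number" part of the Role Controller boils down to comparing what should exist with what does exist. Here is a minimal sketch of that comparison. The role-index naming is an assumption inferred from instance URIs such as storage-2-0, and the function itself is hypothetical.

```python
def reconcile_role(role_name, desired, existing):
    """Return (to_create, to_delete) so that exactly `desired` StatefulSets exist."""
    wanted = ["%s-%d" % (role_name, i) for i in range(desired)]
    to_create = [name for name in wanted if name not in existing]
    to_delete = sorted(name for name in existing if name not in wanted)
    return to_create, to_delete


print(reconcile_role("storage", 3, {"storage-0", "storage-1"}))
# (['storage-2'], [])
```

Running it again after the missing StatefulSet has been created returns two empty lists, so repeating the check is harmless.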

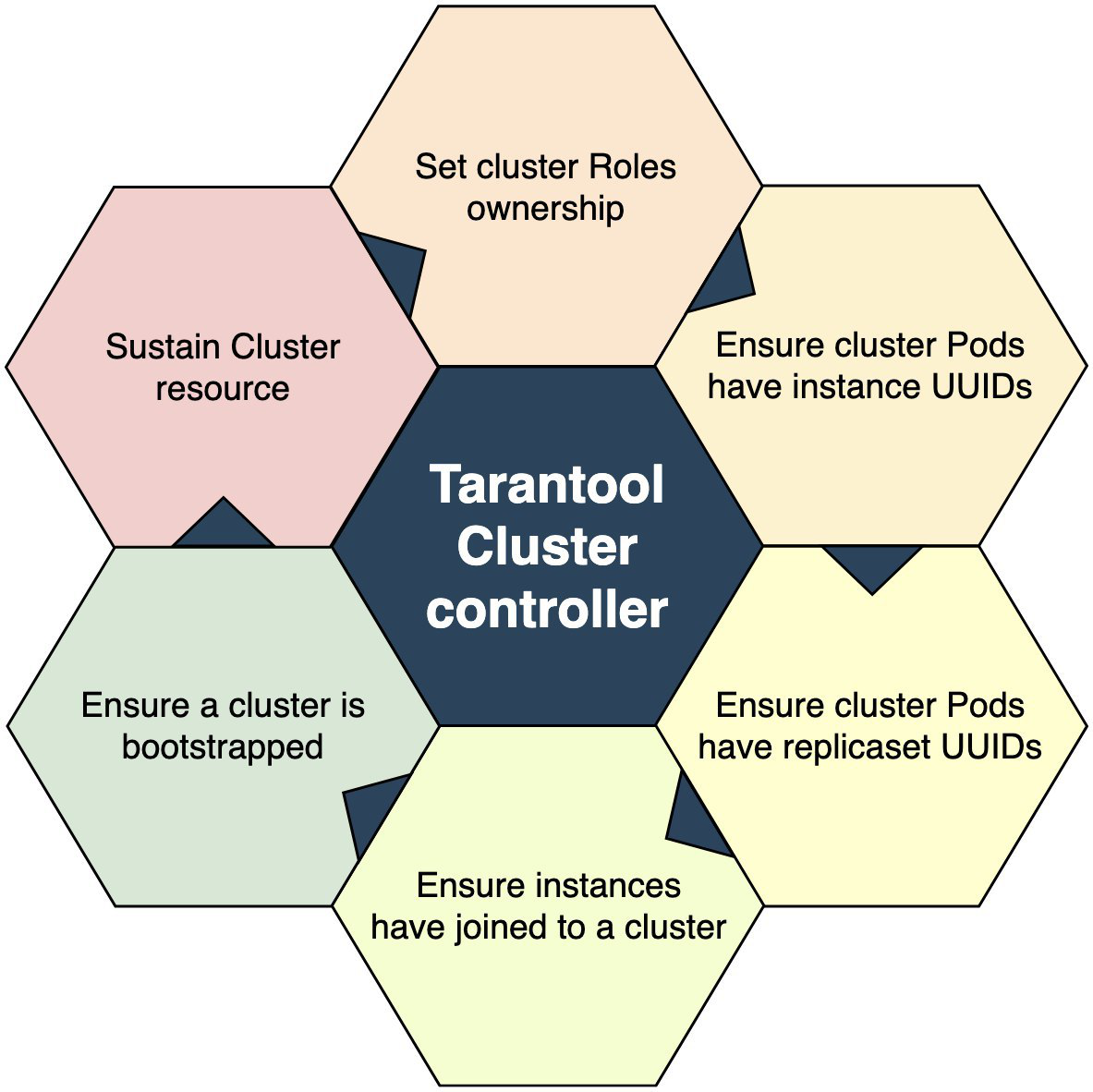

What is a controller like? It is a piece of code that gradually puts the world around itself in order. A Cluster Controller would schematically look like this:

The entry point is a test to see if a corresponding Cluster resource exists for an event. Does it exist? "No" means quitting. "Yes" means moving on to the next block and taking Ownership of the user roles. When the Ownership of one role is taken, the controller quits and goes around a second time. It goes on and on until it has taken the Ownership of all the roles. When the Ownership is taken, it’s time to move to the next block of operations. And the process goes on until the last block. After that, we can assume that the controlled system is in the defined state.
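The "do one thing, quit, go around again" pattern can be sketched as a function that performs at most one action per call and reports whether it wants another pass. The dictionary fields and the second block (joining instances) are hypothetical and only stand in for the real blocks of the controller.

```python
def reconcile(cluster, roles):
    """Perform at most one action per call and say whether to run again."""
    if cluster is None:
        return "done"  # no Cluster resource for this event: quit

    # Block 1: take ownership of the roles, one role per pass.
    for role in roles:
        if role.get("owner") != cluster["name"]:
            role["owner"] = cluster["name"]
            return "requeue"

    # Block 2 (hypothetical): join instances, one instance per pass.
    for role in roles:
        for instance in role["instances"]:
            if not instance["joined"]:
                instance["joined"] = True
                return "requeue"

    return "done"  # every block passed: the system is in the defined state


cluster = {"name": "examples-kv-cluster"}
roles = [{"instances": [{"joined": False}]},
         {"instances": [{"joined": False}]}]
passes = 1
while reconcile(cluster, roles) == "requeue":
    passes += 1
print(passes)  # 5: two ownership passes, two join passes, one final check
```

Because each pass starts from the observed state rather than from memory, the controller can be interrupted at any point and simply pick up where the world currently is.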

In general, everything is quite simple. However, it is important to determine the success criteria for passing each stage. For example, the cluster join operation is considered successful not when it returns a hypothetical success=true, but when it returns an error like "already joined".
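A sketch of that success criterion: the step counts as passed only when the cluster itself confirms that the instance is already a member. The JoinError class, the error text, and the fake join function are invented for this example; the real error message may differ.

```python
class JoinError(Exception):
    """Hypothetical error raised by the join call."""


def join_step(call):
    """Return "done" only once the cluster reports the instance as a member."""
    try:
        call()
    except JoinError as err:
        # Assumption: the error text contains this phrase.
        if "already joined" in str(err):
            return "done"
        raise
    return "requeue"  # the join was accepted; verify it on the next pass


joined = set()


def fake_join(uri):
    """Stand-in for the real API call: the second join of a URI is an error."""
    if uri in joined:
        raise JoinError("instance %s is already joined" % uri)
    joined.add(uri)


print(join_step(lambda: fake_join("storage:3301")))  # requeue
print(join_step(lambda: fake_join("storage:3301")))  # done
```

The benefit of this criterion is that it checks the actual state of the cluster, so a retry after a crash or a lost response reaches the same conclusion.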

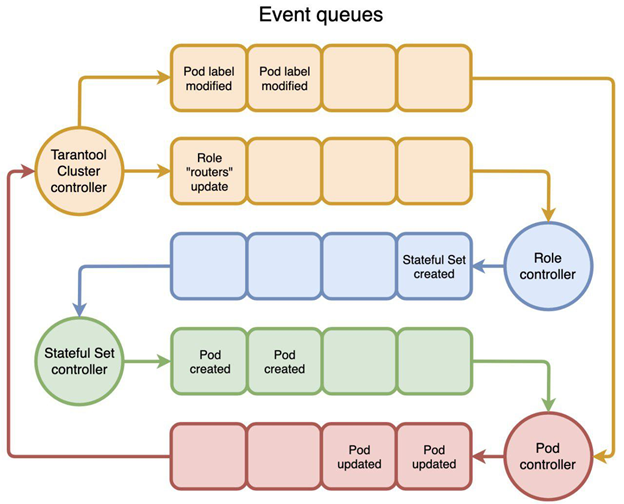

And the last part of this mechanism is the integration of the controller with K8s. From a bird’s eye view, the entire K8s consists of a set of controllers that generate events and respond to them. These events are organized into queues that we can subscribe to. It would schematically look like:

The user calls kubectl create -f tarantool_cluster.yaml, and the corresponding Cluster resource is created. The Cluster Controller is notified of the Cluster resource creation. The first thing it tries to do is find all the Role resources that should be part of this cluster. If it finds them, it assigns the Cluster as the Owner of each Role and updates the Role resource. The Role Controller receives a Role update notification, sees that the resource has an Owner, and starts creating StatefulSets. This is the way it works: the first event triggers the second one, the second event triggers the third one, and so on until one of them stops. You can also set a time trigger, for example, every 5 seconds.
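The chain of events can be imitated with a plain queue and two handler functions. This toy model only shows how one event triggers the next; the event names, the dictionary fields, and the in-memory queue are assumptions and have nothing to do with the real K8s client machinery.

```python
from collections import deque

events = deque()
statefulsets = []


def cluster_controller(cluster, roles):
    """On a Cluster event: adopt matching roles and announce each update."""
    for role in roles:
        if role["cluster"] == cluster["name"] and role.get("owner") is None:
            role["owner"] = cluster["name"]
            events.append(("role_updated", role))


def role_controller(role):
    """On a Role event: create StatefulSets only for roles that have an owner."""
    if role.get("owner") is None:
        return
    for i in range(role["replicas"]):
        statefulsets.append("%s-%d" % (role["name"], i))


cluster = {"name": "examples-kv-cluster"}
roles = [{"name": "storage", "cluster": "examples-kv-cluster", "replicas": 3}]
events.append(("cluster_created", cluster))

# Dispatch loop: each handled event may put new events on the queue.
while events:
    kind, resource = events.popleft()
    if kind == "cluster_created":
        cluster_controller(resource, roles)
    elif kind == "role_updated":
        role_controller(resource)

print(statefulsets)  # ['storage-0', 'storage-1', 'storage-2']
```

The loop stops on its own once no handler produces a new event, which mirrors "and so on until one of them stops".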

This is how the operator is organized: we create a custom resource and write the code that responds to the events related to the resources.

What the Operator Creates

The operator actions ultimately result in creating K8s Pods and containers. In the Tarantool Cartridge cluster deployed on K8s, all Pods are connected to StatefulSets.

Why StatefulSet? As I mentioned earlier, every instance of a Tarantool Cartridge cluster keeps a copy of the cluster topology and configuration. In addition, an application server quite often has some space dedicated, for example, to queues or reference data, and this is already a full-fledged state. StatefulSet also guarantees that Pod identities are preserved, which is important when clustering instances: instances should have fixed identities, otherwise we risk losing the quorum upon restart.

When all cluster resources are ready and in the desired state, they reflect the following hierarchy:

The arrows indicate the Owner-Dependent relationship between resources. It is necessary, for example, for the Garbage Collector to clean up after the Cluster is removed.
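Why the ownership links matter for cleanup can be shown with a toy cascade. The sketch models resources as a name-to-owner mapping and removes everything that transitively depends on the deleted root. The resource names are hypothetical, and the real Garbage Collector works on owner references stored in resource metadata rather than on a dictionary.

```python
def cascade_delete(resources, root):
    """Delete `root` and everything that transitively names it as owner."""
    doomed = {root}
    changed = True
    while changed:
        changed = False
        for name, owner in resources.items():
            if owner in doomed and name not in doomed:
                doomed.add(name)
                changed = True
    return {n: o for n, o in resources.items() if n not in doomed}


# name -> owner (None means the resource has no owner)
resources = {
    "examples-kv-cluster": None,      # Cluster
    "storage": "examples-kv-cluster", # Role
    "storage-0": "storage",           # StatefulSet
    "storage-0-0": "storage-0",       # Pod
    "unrelated-pod": None,
}
print(cascade_delete(resources, "examples-kv-cluster"))
# {'unrelated-pod': None}
```

This is what makes the single kubectl delete command from the walkthrough sufficient: removing the top-level resource takes its dependents with it.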

In addition to StatefulSets, Tarantool Operator creates a Headless Service for the leader election, and the instances communicate with each other over this service.

Tarantool Operator is based on the Operator Framework, and the operator code is written in Golang, so there is nothing special here.

Summary

That’s pretty much all there is to it. We are waiting for your feedback and tickets. We can’t do without them: it is the alpha version after all. What is next? The next step is a lot of polishing:

- Unit, E2E tests;

- Chaos Monkey tests;

- stress tests;

- backup/restore;

- external topology provider.

Each of these topics is broad on its own and deserves a separate article, so please wait for updates!