DeepCode: Outside Perspective

DeepCode, a static analyzer based on machine learning, recently began to support C and C++ projects. This gives us a chance to compare the results of classic and machine-learning static analysis.

We have already mentioned DeepCode in the article «Machine Learning in Static Analysis of Program Source Code». More recently, the DeepCode team published the article «DeepCode adds AI-based static code analysis support for C and C++», announcing support for analyzing C and C++ projects.

To inspect the results of DeepCode analysis, I decided to check the PhysX project. My goal was not to review the errors that PVS-Studio or DeepCode found in this project. I simply wanted to take a third-party project and use it as an example to see how the two analyzers perform.

Running DeepCode analysis is extremely simple: projects hosted on GitHub are analyzed automatically. There is one problem, though. The entire repository was checked indiscriminately even though it contains several projects, so the warnings for them ended up mixed together. Also, the speed of the analysis suggests that the code is not compiled, so many errors may remain hidden from the analyzer.

The overall result of DeepCode is as follows:

This does not quite reflect the number of issues, as they are grouped into one warning, as I will show later. Sure, C/C++ static analysis is a novelty in DeepCode and the work on it has just begun. Anyway, I think it is already possible to draw some conclusions.

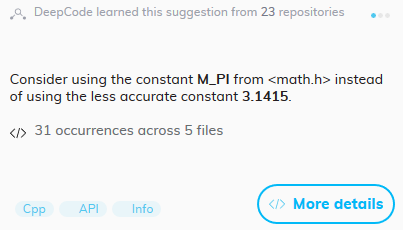

When I started reviewing DeepCode triggerings, I stumbled upon the following warning for the error, indicated by the analyzer as many as 31 times:

This warning caught my eye, since PVS-Studio had a similar diagnostic and, moreover, I’ve touched upon such a message in another article. When I faced with it for the first time, it seemed to me that very few people would write a mathematical constant manually.

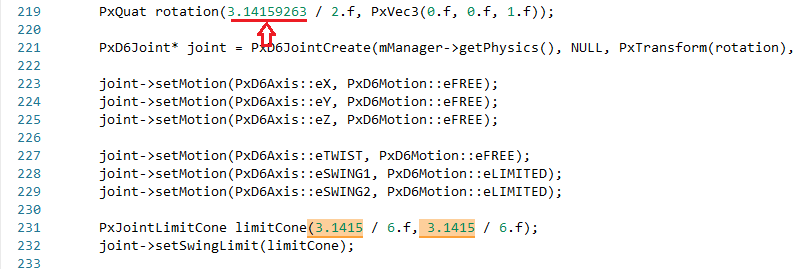

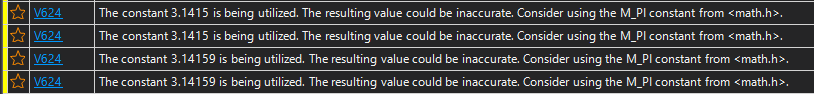

PVS-Studio also found such careless use of constants, but it issued 52 warnings of this type rather than 31. So almost immediately I found a case where machine learning was inferior to the classic approach. If the erroneous code differs even slightly from the most typical form of this kind of error, it becomes much harder for a trained model to find. Classic static analysis does not need the error to occur many times: it is enough for the diagnostic developer to come up with a general principle for how the error arises.

Moreover, the PVS-Studio diagnostic triggers not only on Pi but also on other frequently used constants, and even on specific ones (for example, the Landau-Ramanujan constant). Because such constants are used rarely, their erroneous usage may be difficult to catch even with a large training base.

Since DeepCode triggered precisely on a constant of the form 3.1415, out of curiosity I added 4 decimal places to the constant in the code (3.14159263), and the warning disappeared.

PVS-Studio triggers on various ways of rounding constants, and it issues a warning for the changed piece of code as well:

V624 There is probably a misprint in '3.14159263' constant. Consider using the M_PI constant from <math.h>.

The point here is not that this is a terrible mistake, a rough rounding, or a likely typo. The point is that it confirms that an analyzer trained on code is limited by that very code: if something falls outside the general pattern or appears only in rare cases, an error or a flaw can slip by unnoticed.
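To illustrate the idea of a "general principle", here is a minimal sketch of a rule that flags any literal that looks like Pi but lacks full double precision. The function name looksLikeImprecisePi and both thresholds are assumptions chosen for illustration; this is not how the V624 diagnostic is actually implemented in PVS-Studio.

```cpp
#include <cmath>

// Hypothetical rule, for illustration only: a literal is suspicious if it is
// close enough to Pi to be "meant as Pi", yet is not the full-precision value.
bool looksLikeImprecisePi(double literal) {
    const double pi = std::acos(-1.0);          // Pi at double precision
    const double diff = std::fabs(literal - pi);
    const double nearPi = 1e-2;                 // assumed "meant as Pi" window
    const double exactEnough = 1e-15;           // assumed tolerance for an exact value
    return diff < nearPi && diff > exactEnough;
}
```

A rule of this kind reacts in the same way to 3.14, 3.1415 and 3.14159263, no matter how often each variant occurs in existing code.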

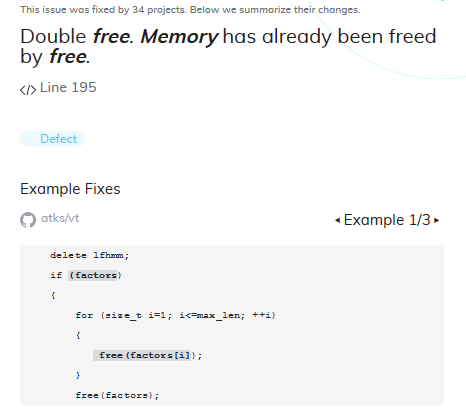

Let’s look at another interesting warning. DeepCode issued one warning for the following code:

    bool Shader::loadShaderCode(const char* fragmentShaderCode, ....)
    {
      ....
      if (mFragmentShaderSource != NULL)
        free(mFragmentShaderSource);
      ....
      if (mFragmentShaderSource != NULL)
        free(mFragmentShaderSource);
      ....
    }

PVS-Studio had more complaints about this code:

- V809 Verifying that a pointer value is not NULL is not required. The 'if (mFragmentShaderSource!= 0)' check can be removed. Shader.cpp 178

- V809 Verifying that a pointer value is not NULL is not required. The 'if (mFragmentShaderSource!= 0)' check can be removed. Shader.cpp 194

- V774 The 'mFragmentShaderSource' pointer was used after the memory was released. Shader.cpp 194

- V586 The 'free' function is called twice for deallocation of the same memory space. Inspect the first argument. Check lines: 179, 195. Shader.cpp 195

You might think that DeepCode's warning simply turned out to be compact while PVS-Studio produced a lot of unnecessary noise, but this is not so: each warning here has its own meaning.

The first two warnings relate to redundant pointer checks, since no such check is needed before calling free(). The third warning indicates that it is unsafe to use the pointer after the memory is released. Even if the pointer is not dereferenced but only checked, this is still suspicious and often indicates a typo. The last warning points to the same problem that DeepCode spotted.
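As a minimal sketch, cleanup code of this kind could be written so that none of the four warnings apply. The helper name releaseBuffer is hypothetical and is not part of PhysX. It relies on two facts: free() accepts a null pointer and does nothing, and resetting the pointer after the release makes a repeated call harmless.

```cpp
#include <cstdlib>

// Hypothetical helper: frees the buffer and resets the pointer.
// No null check is needed, because free(nullptr) is a no-op.
void releaseBuffer(char*& buffer) {
    std::free(buffer);
    buffer = nullptr;  // a repeated call now releases nothing
}
```

With such a helper, calling it twice on the same member releases the memory only once.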

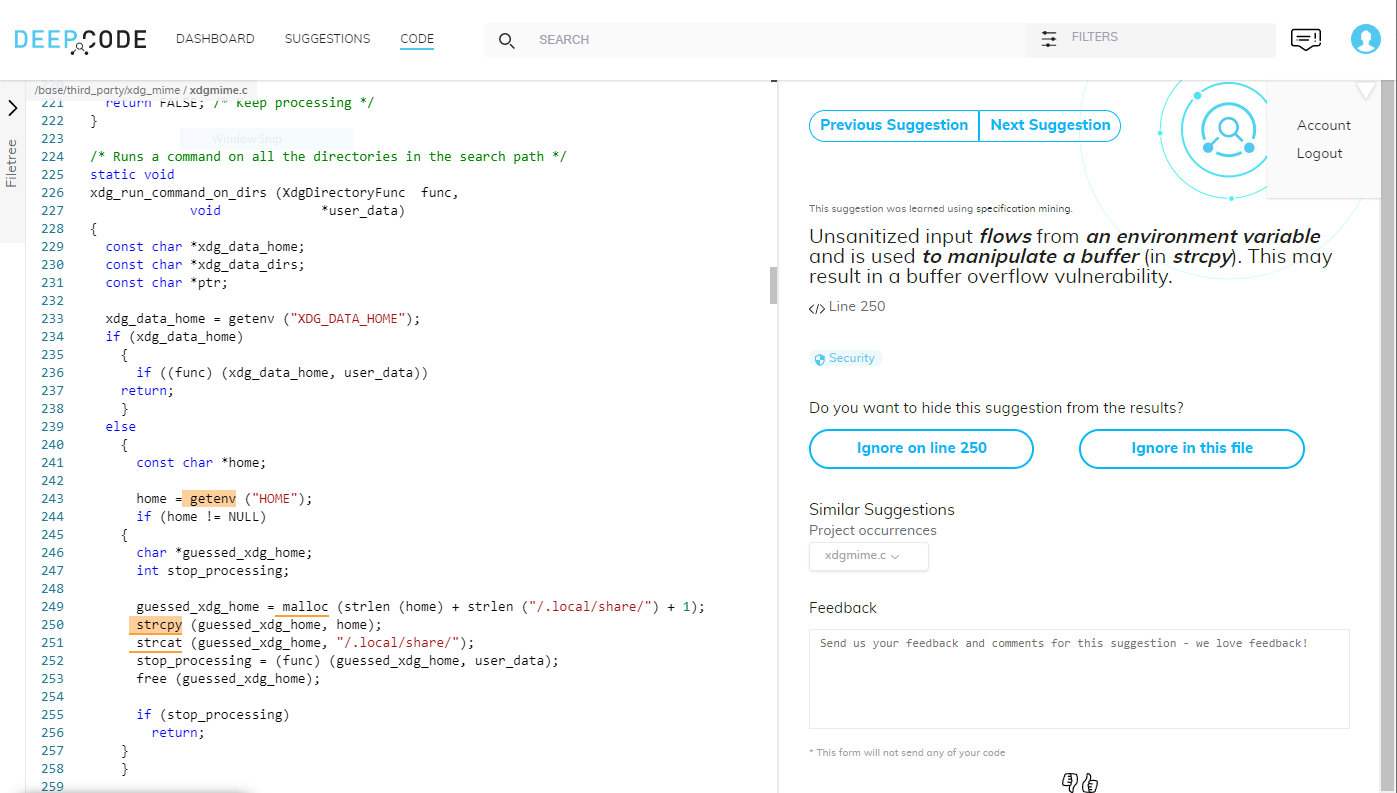

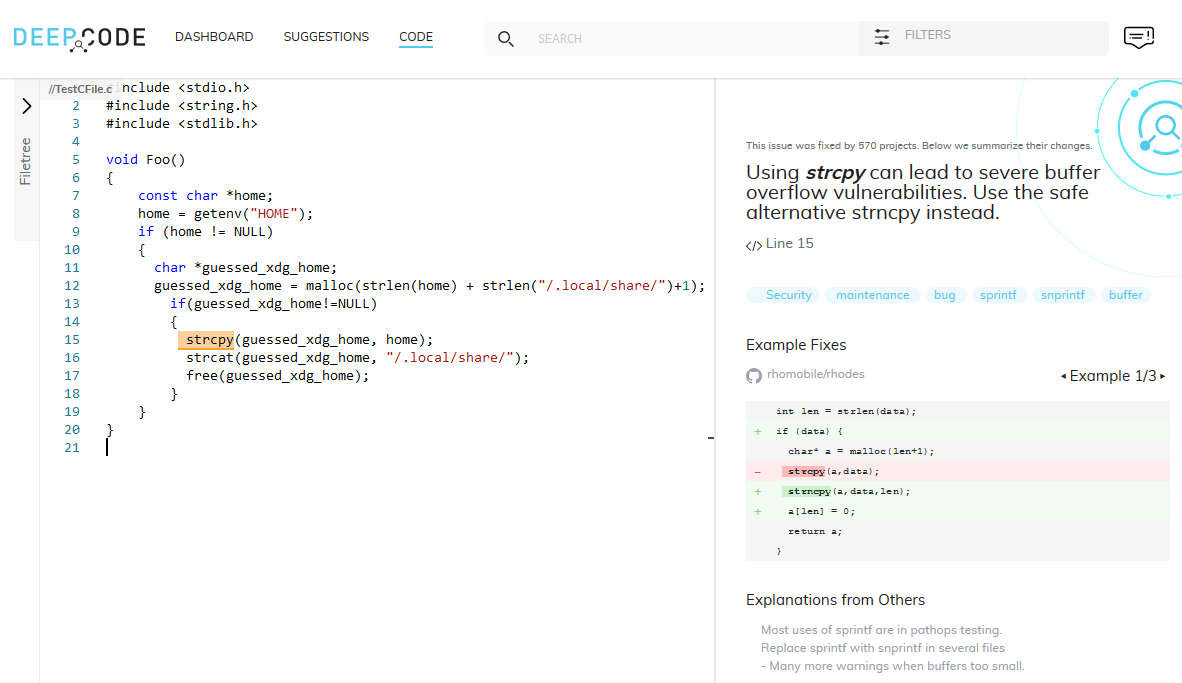

The DeepCode article announcing C/C++ support included a screenshot that, as it seemed to us, contains a false positive. The warning indicates a possible buffer overflow. However, the memory for the buffer is allocated with the length of home and the length of the appended string taken into account, plus 1. So the buffer is definitely large enough.

The problem here may be the missing check that the memory was allocated successfully. Perhaps the DeepCode warning is about something else in this case: the first sentence of the message does not really help to understand what the error is and what the analyzer is actually complaining about.
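The code from the screenshot is not reproduced in the text, so the following is only a sketch of the allocation pattern described above, with an allocation check added. The function name joinHome and its parameters are hypothetical.

```cpp
#include <cstdlib>
#include <cstring>

// Hypothetical reconstruction: concatenates `home` and `suffix` into a newly
// allocated buffer. The caller releases the result with free().
char* joinHome(const char* home, const char* suffix) {
    const std::size_t homeLen = std::strlen(home);
    const std::size_t suffixLen = std::strlen(suffix);
    // Both lengths plus 1 for the terminating null: room for the whole result.
    char* buffer = static_cast<char*>(std::malloc(homeLen + suffixLen + 1));
    if (buffer == nullptr)  // the check that such code may be missing
        return nullptr;
    std::memcpy(buffer, home, homeLen);
    std::memcpy(buffer + homeLen, suffix, suffixLen + 1);  // copies '\0' too
    return buffer;
}
```

In this form the buffer size and the copied byte counts come from the same two lengths, so the copy cannot run past the end of the allocation.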

Perhaps another factor that we wrote about comes into play here: the difficulty of creating meaningful warnings. Warnings are either written by people after the warnings have been classified, or generated from commit comments and documentation. But producing a good warning can be difficult if the error pattern was deduced through machine learning and not by a developer.

I wondered what had to be changed in this code to make the warning disappear. Strangely enough, on an entirely similar piece of code in a new file this warning did not appear, and a different one appeared instead.

Maybe the context that the analyzer relied on was gone, or maybe I did something wrong. Nonetheless, unstable warnings whose appearance is unpredictable can, in my opinion, cause inconvenience not only for users but also for the developers of the analyzer itself, and complicate the development process.

In general, although most of DeepCode's warnings are not false positives, there are so few of them that its output at the moment is scarce rather than accurate. A small number of false positives comes with a small number of warnings overall.

Here are some interesting errors found by PVS-Studio.

Fragment N1

V773 The function was exited without releasing the 'line' pointer. A memory leak is possible. PxTkBmpLoader.cpp 166

    bool BmpLoader::loadBmp(PxFileHandle f)
    {
      ....
      int lineLen = ....;
      unsigned char* line = static_cast<unsigned char*>(malloc(lineLen));
      for(int i = info.Height-1; i >= 0; i--)
      {
        num = fread(line, 1, static_cast<size_t>(lineLen), f);
        if (num != static_cast<size_t>(lineLen)) { fclose(f); return false; }
        ....
      }
      free(line);
      return true;
    }

If reading from the file is interrupted, a memory leak occurs here, because the memory is not freed before the function returns.
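One possible way to rule out such a leak is to let a smart pointer own the buffer, so that every return path releases it. The sketch below is a simplified stand-in for the loop above, not the PhysX code: the names readRows and FreeDeleter are hypothetical, and the caller is assumed to own and close the file.

```cpp
#include <cstddef>
#include <cstdio>
#include <cstdlib>
#include <memory>

// Deleter that lets unique_ptr release malloc'ed memory with free().
struct FreeDeleter {
    void operator()(void* p) const { std::free(p); }
};

// Hypothetical stand-in for the row-reading loop: reads `height` rows of
// `lineLen` bytes each. The buffer is released on every return path.
bool readRows(std::FILE* f, std::size_t lineLen, int height) {
    std::unique_ptr<unsigned char, FreeDeleter> line(
        static_cast<unsigned char*>(std::malloc(lineLen)));
    if (!line)
        return false;
    for (int i = height - 1; i >= 0; i--) {
        const std::size_t num = std::fread(line.get(), 1, lineLen, f);
        if (num != lineLen)
            return false;  // no leak: unique_ptr frees the buffer here
    }
    return true;
}
```

The early return stays as short as in the original, but the cleanup no longer depends on remembering to call free() before it.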

Fragment N2

V595 The 'gSphereActor' pointer was utilized before it was verified against nullptr. Check lines: 228, 230. SnippetContactReportCCD.cpp 228

    void initScene()
    {
      ....
      gSphereActor = gPhysics->createRigidDynamic(spherePose);
      gSphereActor->setRigidBodyFlag(PxRigidBodyFlag::eENABLE_CCD, true);
      if (!gSphereActor)
        return;
      ....
    }

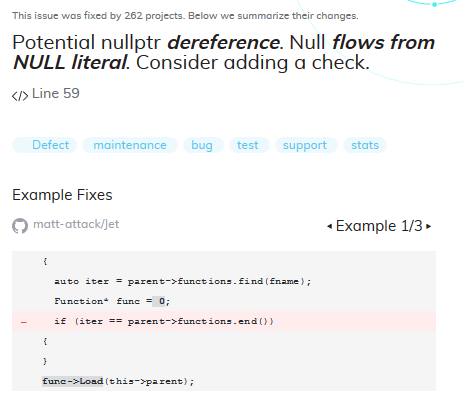

The gSphereActor pointer is dereferenced, but immediately after that it is checked for nullptr, and the function exits. That is, a null pointer is possible here, but dereferencing still occurs. There were 24 errors of this type in the PhysX project.
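The usual fix is to check the pointer before its first use. A minimal sketch with a stand-in type follows; Actor, enableCcd and configureActor are illustrative names and not the PhysX API.

```cpp
// Hypothetical stand-in for an actor object.
struct Actor {
    bool ccdEnabled = false;
    void enableCcd() { ccdEnabled = true; }
};

// Returns false without touching the actor if its creation failed.
bool configureActor(Actor* actor) {
    if (actor == nullptr)  // check first ...
        return false;
    actor->enableCcd();    // ... dereference afterwards
    return true;
}
```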

DeepCode issued warnings only for one specific kind of case (if I understood correctly), where the pointer was initially set to null and there were no other assignments nearby. PVS-Studio did not trigger on such code, because such a warning would most often be false: the pointer can get a value in another translation unit (most of these warnings related to global variables). This example shows that the claim that trained static analysis issues fewer false positives does not actually hold.

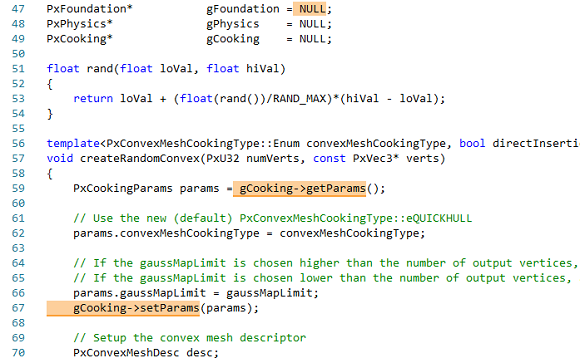

Here DeepCode, most likely, points to the wrong initialization for some reason. Instead of the initialization of gCooking, the initialization of gFoundation is highlighted, although that pointer is not highlighted anywhere further.

Fragment N3

V517 The use of 'if (A) {…} else if (A) {…}' pattern was detected. There is a probability of logical error presence. Check lines: 266, 268. AABox.cpp 266

    bool AABox::initRT(int depthSamples, int coverageSamples)
    {
      ....
      int query;
      ....
      if (....)
      {
        ....
        if ( query < coverageSamples)
          ret = false;
        else if ( query > coverageSamples)
          coverageSamples = query;
        ....
        if ( query < depthSamples)
          ret = false;
        else if ( query > depthSamples)
          depthSamples = query;
      }
      else {
        ....
        if ( query < depthSamples)
          ret = false;
        else if ( query < depthSamples) // <=
          depthSamples = query;
        ....
      }
      ....
    }

It seems that a copy-paste error has crept in here. The analyzer detected the same condition in the if and else if branches. From the previous if-else fragments we can see that '<' is normally checked in the if branch and '>' in the else if branch. Although the pattern looks extremely simple, I did not find such a warning among DeepCode's results. One might wonder: what could go wrong with detecting such a simple pattern?
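One way to avoid this kind of copy-paste error is to move the repeated comparison into a single helper. The sketch below assumes that the intended condition in the second branch was '>', as in the two preceding fragments; the name reconcileSamples is hypothetical.

```cpp
// Hypothetical helper. Returns false if the queried value is smaller than the
// requested one; otherwise raises `requested` to the queried value.
bool reconcileSamples(int query, int& requested) {
    if (query < requested)
        return false;
    if (query > requested)
        requested = query;
    return true;
}
```

Each of the three fragments would then shrink to one line of the form `if (!reconcileSamples(query, depthSamples)) ret = false;`, leaving no second condition to mistype.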

Conclusion

Admittedly, DeepCode has only just announced support for C/C++, and this part of the product is only starting to take shape. Still, you can give it a go on your own projects. There is a convenient feedback dialog in case you get a false positive.

However, even now we can see the downsides that machine learning brings to static analysis. That is why we are skeptical about adding machine learning to static analyzers: the gain is doubtful, because you still have to support the analyzer, write and edit documentation, and work out the diagnostics themselves, exactly as before. In addition, the problems that arise during development require more complex solutions and specific skills from developers, which narrows the pool of potential candidates when expanding the team. Indeed, a developer in this case must both be a specialist in the analyzed language and understand machine learning well.